Chapter 14 Comparing several means (one-way ANOVA)

This chapter introduces one of the most widely used tools in statistics, known as “the analysis of variance”, which is usually referred to as ANOVA. The basic technique was developed by Sir Ronald Fisher in the early 20th century, and it is to him that we owe the rather unfortunate terminology. The term ANOVA is a little misleading, in two respects. Firstly, although the name of the technique refers to variances, ANOVA is concerned with investigating differences in means. Secondly, there are several different things out there that are all referred to as ANOVAs, some of which have only a very tenuous connection to one another. Later on in the book we’ll encounter a range of different ANOVA methods that apply in quite different situations, but for the purposes of this chapter we’ll only consider the simplest form of ANOVA, in which we have several different groups of observations, and we’re interested in finding out whether those groups differ in terms of some outcome variable of interest. This is the question that is addressed by a one-way ANOVA.

The structure of this chapter is as follows: In Section 14.1 I’ll introduce a fictitious data set that we’ll use as a running example throughout the chapter. After introducing the data, I’ll describe the mechanics of how a one-way ANOVA actually works (Section 14.2) and then focus on how you can run one in R (Section 14.3). These two sections are the core of the chapter. The remainder of the chapter discusses a range of important topics that inevitably arise when running an ANOVA, namely how to calculate effect sizes (Section 14.4), post hoc tests and corrections for multiple comparisons (Section 14.5) and the assumptions that ANOVA relies upon (Section 14.6). We’ll also talk about how to check those assumptions and some of the things you can do if the assumptions are violated (Sections 14.7 to 14.10). At the end of the chapter we’ll talk a little about the relationship between ANOVA and other statistical tools (Section 14.11).

14.1 An illustrative data set

Suppose you’ve become involved in a clinical trial in which you are testing a new antidepressant drug called Joyzepam. In order to construct a fair test of the drug’s effectiveness, the study involves three separate drugs to be administered. Besides Joyzepam itself, one is a placebo and the other is an existing antidepressant / anti-anxiety drug called Anxifree. A collection of 18 participants with moderate to severe depression are recruited for your initial testing. Because the drugs are sometimes administered in conjunction with psychological therapy, your study includes 9 people undergoing cognitive behavioural therapy (CBT) and 9 who are not. Participants are randomly assigned (double-blind, of course) to a treatment, such that there are 3 CBT people and 3 no-therapy people assigned to each of the 3 drugs. A psychologist assesses the mood of each person after a 3-month run with their drug, and the overall improvement in each person’s mood is assessed on a scale ranging from $-5$ to $+5$.

With that as the study design, let’s now look at what we’ve got in the data file:

load( "./rbook-master/data/clinicaltrial.Rdata" ) # load data

str(clin.trial)

## 'data.frame': 18 obs. of 3 variables:

## $ drug : Factor w/ 3 levels "placebo","anxifree",..: 1 1 1 2 2 2 3 3 3 1 ...

## $ therapy : Factor w/ 2 levels "no.therapy","CBT": 1 1 1 1 1 1 1 1 1 2 ...

## $ mood.gain: num 0.5 0.3 0.1 0.6 0.4 0.2 1.4 1.7 1.3 0.6 ...

So we have a single data frame called clin.trial, containing three variables: drug, therapy and mood.gain. Next, let’s print the data frame to get a sense of what the data actually look like.

print( clin.trial )

## drug therapy mood.gain

## 1 placebo no.therapy 0.5

## 2 placebo no.therapy 0.3

## 3 placebo no.therapy 0.1

## 4 anxifree no.therapy 0.6

## 5 anxifree no.therapy 0.4

## 6 anxifree no.therapy 0.2

## 7 joyzepam no.therapy 1.4

## 8 joyzepam no.therapy 1.7

## 9 joyzepam no.therapy 1.3

## 10 placebo CBT 0.6

## 11 placebo CBT 0.9

## 12 placebo CBT 0.3

## 13 anxifree CBT 1.1

## 14 anxifree CBT 0.8

## 15 anxifree CBT 1.2

## 16 joyzepam CBT 1.8

## 17 joyzepam CBT 1.3

## 18 joyzepam CBT 1.4

For the purposes of this chapter, what we’re really interested in is the effect of drug on mood.gain. The first thing to do is calculate some descriptive statistics and draw some graphs. In Chapter 5 we discussed a variety of different functions that can be used for this purpose. For instance, we can use the xtabs() function to see how many people we have in each group:

xtabs( ~drug, clin.trial )

## drug

## placebo anxifree joyzepam

## 6 6 6

Similarly, we can use the aggregate() function to calculate means and standard deviations for the mood.gain variable broken down by which drug was administered:

aggregate( mood.gain ~ drug, clin.trial, mean )

## drug mood.gain

## 1 placebo 0.4500000

## 2 anxifree 0.7166667

## 3 joyzepam 1.4833333

aggregate( mood.gain ~ drug, clin.trial, sd )

## drug mood.gain

## 1 placebo 0.2810694

## 2 anxifree 0.3920034

## 3 joyzepam 0.2136976

Finally, we can use plotmeans() from the gplots package to produce a pretty picture.

library(gplots)

plotmeans( formula = mood.gain ~ drug, # plot mood.gain by drug

data = clin.trial, # the data frame

xlab = "Drug Administered", # x-axis label

ylab = "Mood Gain", # y-axis label

n.label = FALSE # don't display sample size

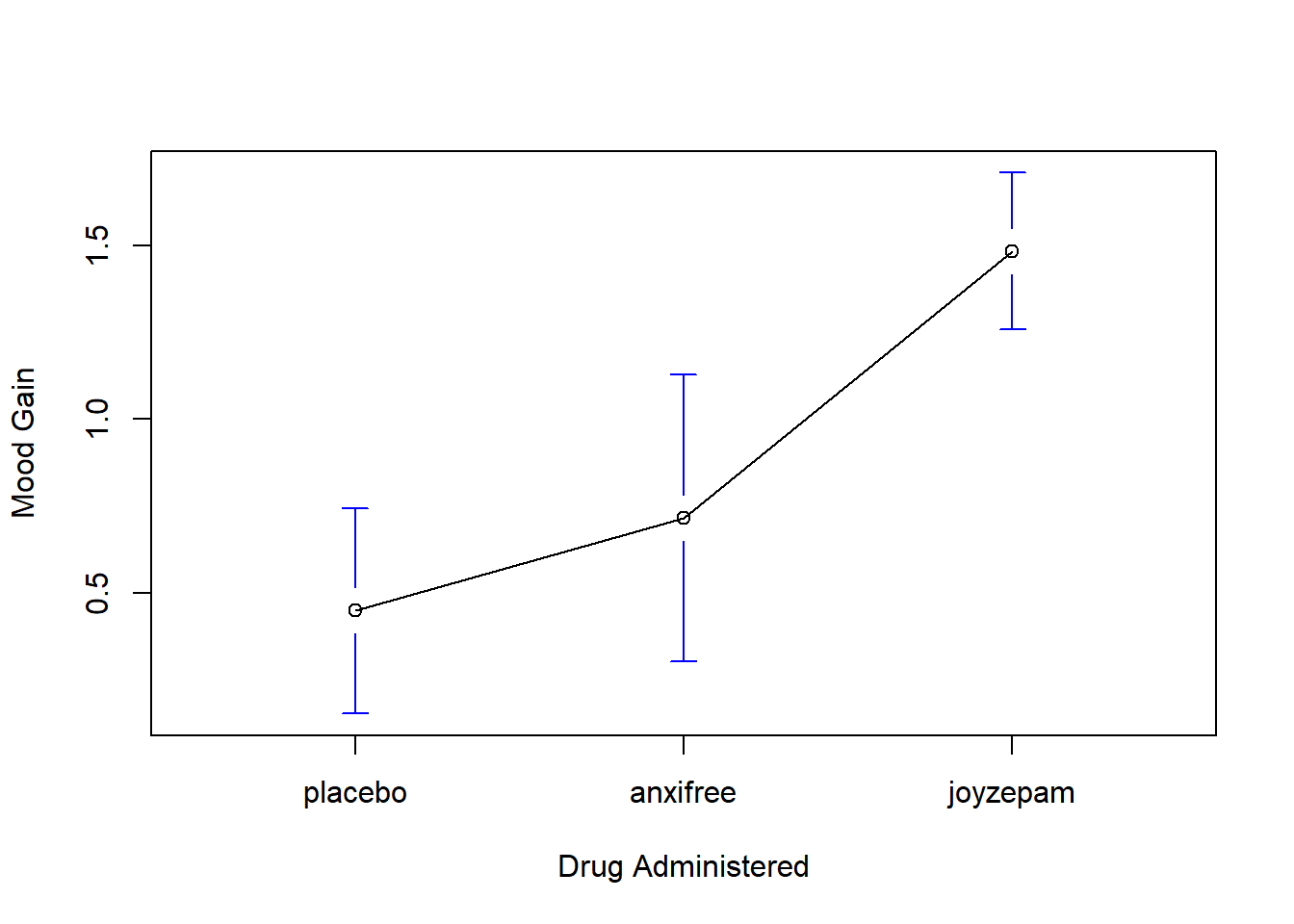

)The results are shown in Figure 14.1, which plots the average mood gain for all three conditions; error bars show 95% confidence intervals. As the plot makes clear, there is a larger improvement in mood for participants in the Joyzepam group than for either the Anxifree group or the placebo group. The Anxifree group shows a larger mood gain than the control group, but the difference isn’t as large.

The question that we want to answer is: are these difference “real”, or are they just due to chance?

## Warning: package 'gplots' was built under R version 3.5.2##

## Attaching package: 'gplots'## The following object is masked from 'package:stats':

##

## lowess

Figure 14.1: Average mood gain as a function of drug administered. Error bars depict 95% confidence intervals associated with each of the group means.
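If you don't have the clinicaltrial.Rdata file to hand, the data set is small enough to rebuild from the printout above. The sketch below is my own reconstruction, not the book's data file: it assumes the row order and factor levels shown by str() and print(), and then checks the result against the group sizes and means we just computed.

```r
# Rebuild clin.trial from the printed values (a reconstruction, not the original .Rdata file)
clin.trial <- data.frame(
  drug = factor(rep(rep(c("placebo", "anxifree", "joyzepam"), each = 3), times = 2),
                levels = c("placebo", "anxifree", "joyzepam")),
  therapy = factor(rep(c("no.therapy", "CBT"), each = 9),
                   levels = c("no.therapy", "CBT")),
  mood.gain = c(0.5, 0.3, 0.1, 0.6, 0.4, 0.2, 1.4, 1.7, 1.3,   # rows 1-9: no.therapy
                0.6, 0.9, 0.3, 1.1, 0.8, 1.2, 1.8, 1.3, 1.4)   # rows 10-18: CBT
)

str(clin.trial)                                   # same structure as the loaded data
xtabs( ~drug + therapy, clin.trial )              # 3 people in every drug x therapy cell
aggregate( mood.gain ~ drug, clin.trial, mean )   # 0.45, 0.717, 1.483, as above
```

Everything later in the chapter that refers to clin.trial should work with this version too, since it has the same variables and factor levels.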

14.2 How ANOVA works

In order to answer the question posed by our clinical trial data, we’re going to run a one-way ANOVA. As usual, I’m going to start by showing you how to do it the hard way, building the statistical tool from the ground up and showing you how you could do it in R if you didn’t have access to any of the cool built-in ANOVA functions. And, as always, I hope you’ll read it carefully, try to do it the long way once or twice to make sure you really understand how ANOVA works, and then – once you’ve grasped the concept – never ever do it this way again.

The experimental design that I described in the previous section strongly suggests that we’re interested in comparing the average mood change for the three different drugs. In that sense, we’re talking about an analysis similar to the $t$-test (Chapter 13), but involving more than two groups. If we let $\mu_P$ denote the population mean for the mood change induced by the placebo, and let $\mu_A$ and $\mu_J$ denote the corresponding means for our two drugs, Anxifree and Joyzepam, then the (somewhat pessimistic) null hypothesis that we want to test is that all three population means are identical: that is, neither of the two drugs is any more effective than a placebo. Mathematically, we write this null hypothesis like this:

$$H_0: \mbox{it is true that } \mu_P = \mu_A = \mu_J$$

As a consequence, our alternative hypothesis is that at least one of the three different treatments is different from the others. It’s a little trickier to write this mathematically, because (as we’ll discuss) there are quite a few different ways in which the null hypothesis can be false. So for now we’ll just write the alternative hypothesis like this:

$$H_1: \mbox{it is not true that } \mu_P = \mu_A = \mu_J$$

This null hypothesis is a lot trickier to test than any of the ones we’ve seen previously. How shall we do it? A sensible guess would be to “do an ANOVA”, since that’s the title of the chapter, but it’s not particularly clear why an “analysis of variances” will help us learn anything useful about the means. In fact, this is one of the biggest conceptual difficulties that people have when first encountering ANOVA. To see how this works, I find it most helpful to start by playing some mathematical games with the formula that describes the variance. It will turn out that playing around with variances gives us a useful tool for investigating means.

14.2.1 Two formulas for the variance of $Y$

Let’s start by introducing some notation. We’ll use $G$ to refer to the total number of groups. For our data set, there are three drugs, so there are $G = 3$ groups. Next, we’ll use $N$ to refer to the total sample size: there are a total of $N = 18$ people in our data set. Similarly, let’s use $N_k$ to denote the number of people in the $k$-th group. In our fake clinical trial, the sample size is $N_k = 6$ for all three groups.[^202] Finally, we’ll use $Y$ to denote the outcome variable: in our case, $Y$ refers to mood change. Specifically, we’ll use $Y_{ik}$ to refer to the mood change experienced by the $i$-th member of the $k$-th group. Similarly, we’ll use $\bar{Y}$ to be the average mood change, taken across all 18 people in the experiment, and $\bar{Y}_k$ to refer to the average mood change experienced by the 6 people in group $k$.

Excellent. Now that we’ve got our notation sorted out, we can start writing down formulas. To start with, let’s recall the formula for the variance that we used in Section 5.2, way back in those kinder days when we were just doing descriptive statistics. The sample variance of $Y$ is defined as follows:

$$\mbox{Var}(Y) = \frac{1}{N} \sum_{k=1}^G \sum_{i=1}^{N_k} \left(Y_{ik} - \bar{Y} \right)^2$$

This formula looks pretty much identical to the formula for the variance in Section 5.2. The only difference is that this time around I’ve got two summations here: I’m summing over groups (i.e., values for $k$) and over the people within the groups (i.e., values for $i$). This is purely a cosmetic detail: if I’d instead used the notation $Y_p$ to refer to the value of the outcome variable for person $p$ in the sample, then I’d only have a single summation. The only reason that we have a double summation here is that I’ve classified people into groups, and then assigned numbers to people within groups.

A concrete example might be useful here. Let’s consider this table, in which we have a total of $N = 5$ people sorted into $G = 2$ groups. Arbitrarily, let’s say that the “cool” people are group 1, and the “uncool” people are group 2, and it turns out that we have three cool people ($N_1 = 3$) and two uncool people ($N_2 = 2$).

| name | person ($p$) | group | group num. ($k$) | index in group ($i$) | grumpiness ($Y_{ik}$ or $Y_p$) |
|---|---|---|---|---|---|
| Ann | 1 | cool | 1 | 1 | 20 |
| Ben | 2 | cool | 1 | 2 | 55 |
| Cat | 3 | cool | 1 | 3 | 21 |
| Dan | 4 | uncool | 2 | 1 | 91 |
| Egg | 5 | uncool | 2 | 2 | 22 |

Notice that I’ve constructed two different labelling schemes here. We have a “person” variable $p$, so it would be perfectly sensible to refer to $Y_p$ as the grumpiness of the $p$-th person in the sample. For instance, the table shows that Dan is the fourth person listed, so we’d say $p = 4$. So, when talking about the grumpiness of this “Dan” person, whoever he might be, we could refer to his grumpiness by saying that $Y_p = 91$, for person $p = 4$ that is. However, that’s not the only way we could refer to Dan. As an alternative we could note that Dan belongs to the “uncool” group ($k = 2$), and is in fact the first person listed in the uncool group ($i = 1$). So it’s equally valid to refer to Dan’s grumpiness by saying that $Y_{ik} = 91$, where $k = 2$ and $i = 1$. In other words, each person $p$ corresponds to a unique $ik$ combination, and so the formula that I gave above is actually identical to our original formula for the variance, which would be

$$\mbox{Var}(Y) = \frac{1}{N} \sum_{p=1}^N \left(Y_p - \bar{Y} \right)^2$$

In both formulas, all we’re doing is summing over all of the observations in the sample. Most of the time we would just use the simpler $Y_p$ notation: the equation using $Y_p$ is clearly the simpler of the two. However, when doing an ANOVA it’s important to keep track of which participants belong in which groups, and we need to use the $Y_{ik}$ notation to do this.
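To convince yourself that the two labelling schemes really do give the same variance, here is a small sketch using the grumpiness table. It computes the variance once with a single sum over persons $p$ and once with a double sum over groups $k$ and people $i$ within groups. Note that it divides by $N$, as in the formula above; R's built-in var() divides by $N - 1$ and so would give a different number.

```r
# Grumpiness data from the table above
grumpiness <- c(20, 55, 21, 91, 22)   # Y_p, indexed by person p = 1, ..., 5
group      <- c(1, 1, 1, 2, 2)        # k: 1 = cool, 2 = uncool

N     <- length(grumpiness)
Y.bar <- mean(grumpiness)             # the grand mean

# Single summation over persons p
var.single <- sum((grumpiness - Y.bar)^2) / N

# Double summation: outer loop over groups k, inner loop over people i in group k
total <- 0
for (k in unique(group)) {
  Y.k <- grumpiness[group == k]       # Y_{1k}, ..., Y_{N_k k}
  for (i in seq_along(Y.k)) {
    total <- total + (Y.k[i] - Y.bar)^2
  }
}
var.double <- total / N

print(c(single = var.single, double = var.double))
```

Both versions come out at 778.96: the double sum visits exactly the same five squared deviations, just in group order.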

14.2.2 From variances to sums of squares

Okay, now that we’ve got a good grasp on how the variance is calculated, let’s define something called the total sum of squares, which is denoted $\mbox{SS}_{tot}$. This is very simple: instead of averaging the squared deviations, which is what we do when calculating the variance, we just add them up. So the formula for the total sum of squares is almost identical to the formula for the variance:

$$\mbox{SS}_{tot} = \sum_{k=1}^G \sum_{i=1}^{N_k} \left(Y_{ik} - \bar{Y} \right)^2$$

When we talk about analysing variances in the context of ANOVA, what we’re really doing is working with the total sums of squares rather than the actual variance. One very nice thing about the total sum of squares is that we can break it up into two different kinds of variation. Firstly, we can talk about the within-group sum of squares, in which we look to see how different each individual person is from their own group mean:

$$\mbox{SS}_w = \sum_{k=1}^G \sum_{i=1}^{N_k} \left( Y_{ik} - \bar{Y}_k \right)^2$$

where $\bar{Y}_k$ is a group mean. In our example, $\bar{Y}_k$ would be the average mood change experienced by those people given the $k$-th drug. So, instead of comparing individuals to the average of all people in the experiment, we’re only comparing them to those people in the same group. As a consequence, you’d expect the value of $\mbox{SS}_w$ to be smaller than the total sum of squares, because it’s completely ignoring any group differences – that is, the fact that the drugs (if they work) will have different effects on people’s moods.

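Next, we can define a third notion of variation which captures only the differences between groups. We do this by looking at the differences between the group means $\bar{Y}_k$ and grand mean $\bar{Y}$. In order to quantify the extent of this variation, what we do is calculate the between-group sum of squares:

$$\begin{aligned} \mbox{SS}_b &= \sum_{k=1}^G \sum_{i=1}^{N_k} \left( \bar{Y}_k - \bar{Y} \right)^2 \\ &= \sum_{k=1}^G N_k \left( \bar{Y}_k - \bar{Y} \right)^2 \end{aligned}$$

It’s not too difficult to show that the total variation among people in the experiment $\mbox{SS}_{tot}$ is actually the sum of the differences between the groups $\mbox{SS}_b$ and the variation inside the groups $\mbox{SS}_w$. That is:

$$\mbox{SS}_w + \mbox{SS}_b = \mbox{SS}_{tot}$$

Yay.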
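For readers who want the missing step, here is one way to see why the decomposition holds. The trick is to add and subtract the group mean $\bar{Y}_k$ inside each squared deviation and then expand the square:

```latex
\begin{aligned}
\mbox{SS}_{tot}
  &= \sum_{k=1}^G \sum_{i=1}^{N_k} \left[ \left( Y_{ik} - \bar{Y}_k \right) + \left( \bar{Y}_k - \bar{Y} \right) \right]^2 \\
  &= \sum_{k=1}^G \sum_{i=1}^{N_k} \left( Y_{ik} - \bar{Y}_k \right)^2
     + 2 \sum_{k=1}^G \left( \bar{Y}_k - \bar{Y} \right) \sum_{i=1}^{N_k} \left( Y_{ik} - \bar{Y}_k \right)
     + \sum_{k=1}^G N_k \left( \bar{Y}_k - \bar{Y} \right)^2 \\
  &= \mbox{SS}_w + 0 + \mbox{SS}_b
\end{aligned}
```

The middle (cross-product) term vanishes because, within any group, the deviations from that group's own mean sum to zero: $\sum_{i=1}^{N_k} (Y_{ik} - \bar{Y}_k) = N_k \bar{Y}_k - N_k \bar{Y}_k = 0$ for every $k$.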

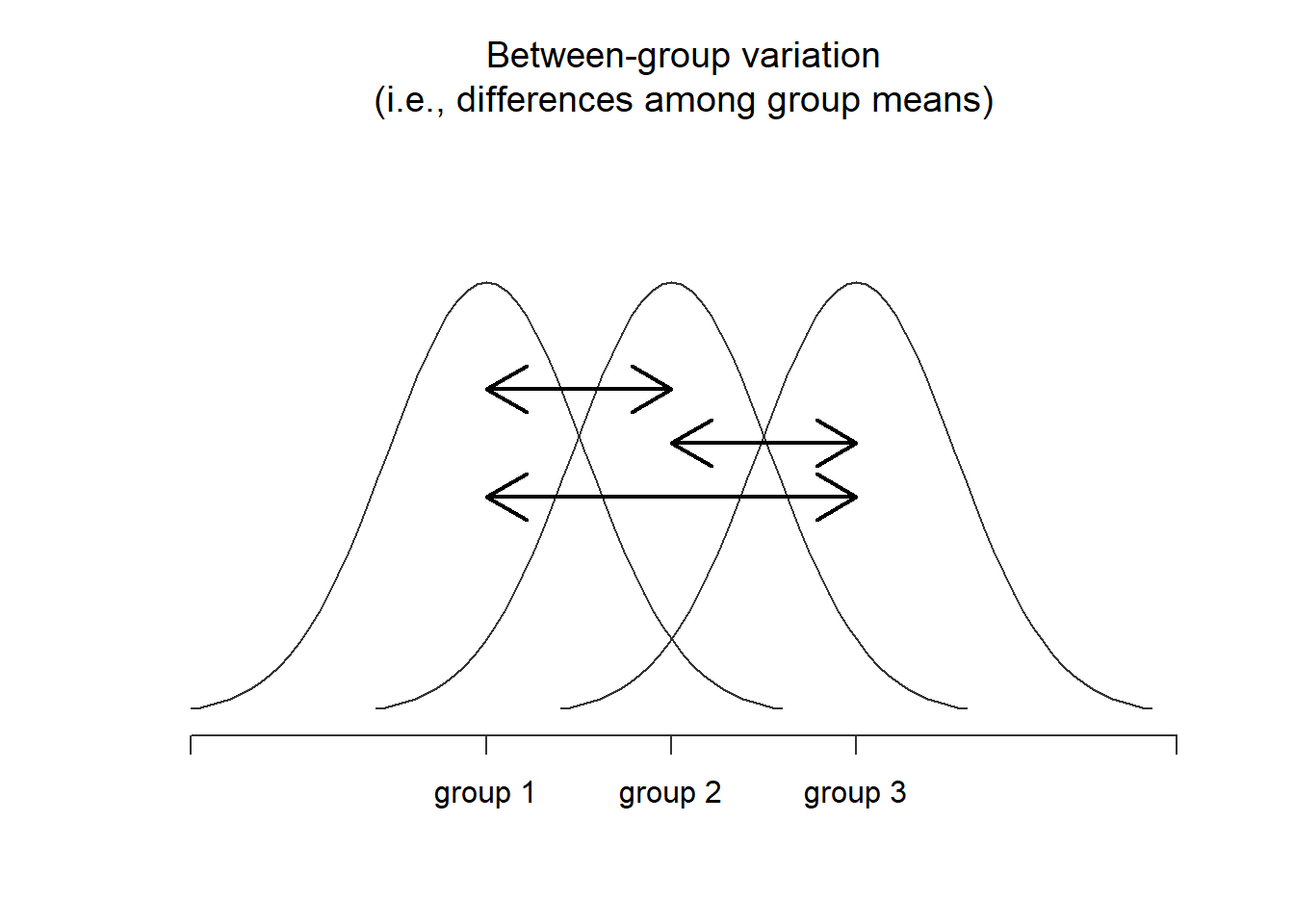

Figure 14.2: Graphical illustration of “between groups” variation

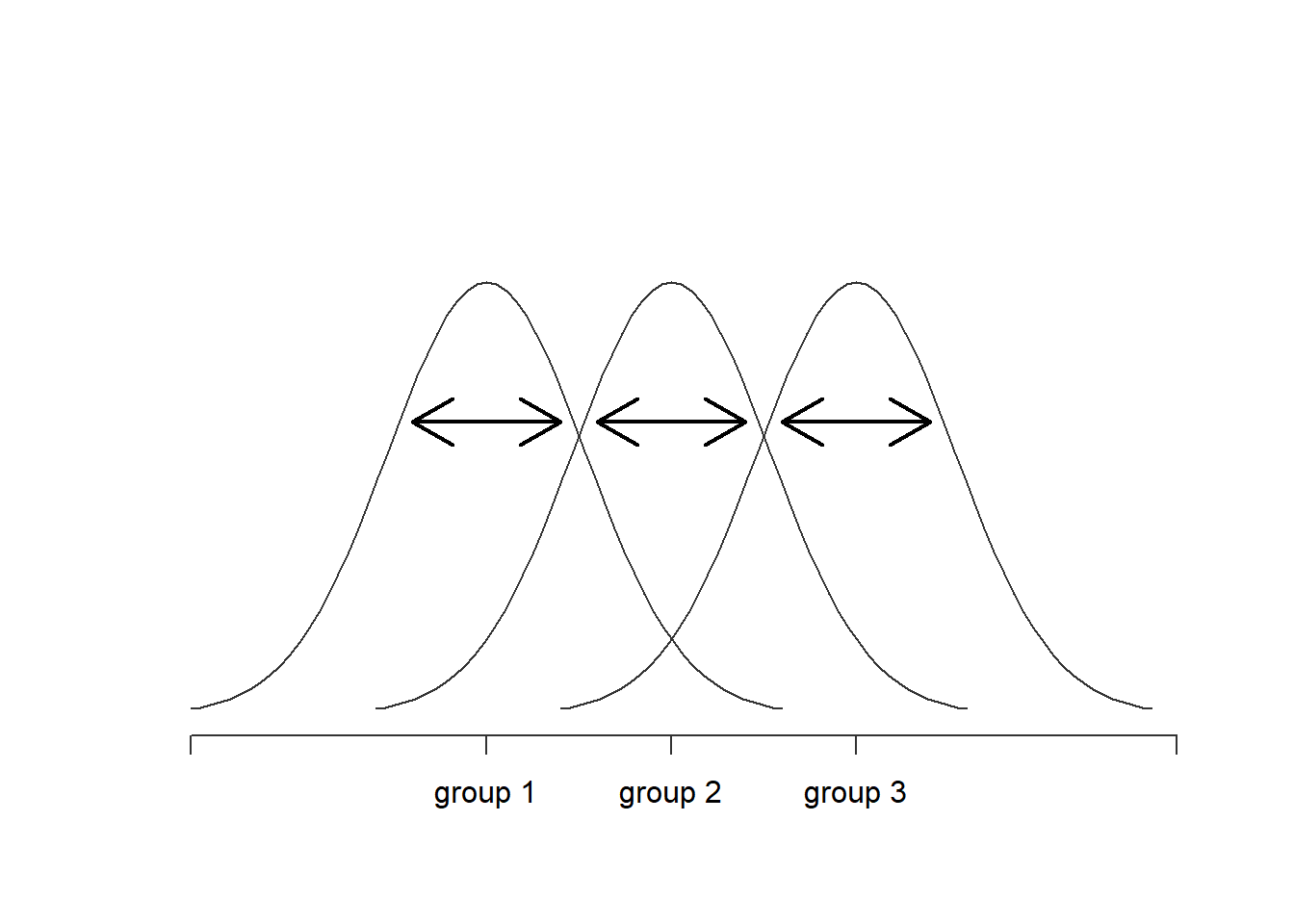

Figure 14.3: Graphical illustration of “within groups” variation

Okay, so what have we found out? We’ve discovered that the total variability associated with the outcome variable ($\mbox{SS}_{tot}$) can be mathematically carved up into the sum of “the variation due to the differences in the sample means for the different groups” ($\mbox{SS}_b$) plus “all the rest of the variation” ($\mbox{SS}_w$). How does that help me find out whether the groups have different population means? Um. Wait. Hold on a second… now that I think about it, this is exactly what we were looking for. If the null hypothesis is true, then you’d expect all the sample means to be pretty similar to each other, right? And that would imply that you’d expect $\mbox{SS}_b$ to be really small, or at least you’d expect it to be a lot smaller than “the variation associated with everything else”, $\mbox{SS}_w$. Hm. I detect a hypothesis test coming on…
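If you'd like to see the decomposition $\mbox{SS}_{tot} = \mbox{SS}_b + \mbox{SS}_w$ hold on actual numbers, the sketch below computes all three sums of squares for the clinical trial. So that it runs on its own, it types in the 18 mood.gain values and drug labels from the printout in Section 14.1 rather than loading the data file. It uses ave(), which returns each observation's group mean.

```r
# Mood gain and drug for the 18 participants (as printed in Section 14.1)
mood.gain <- c(0.5, 0.3, 0.1, 0.6, 0.4, 0.2, 1.4, 1.7, 1.3,
               0.6, 0.9, 0.3, 1.1, 0.8, 1.2, 1.8, 1.3, 1.4)
drug <- factor(rep(rep(c("placebo", "anxifree", "joyzepam"), each = 3), times = 2),
               levels = c("placebo", "anxifree", "joyzepam"))

grand.mean <- mean(mood.gain)        # Y-bar
gp.mean    <- ave(mood.gain, drug)   # Y-bar_k, repeated for every person in group k

SS.tot <- sum((mood.gain - grand.mean)^2)   # total sum of squares
SS.w   <- sum((mood.gain - gp.mean)^2)      # within-group sum of squares
SS.b   <- sum((gp.mean - grand.mean)^2)     # between-group: same as sum of N_k * (Y-bar_k - Y-bar)^2

print(c(SS.tot = SS.tot, SS.w = SS.w, SS.b = SS.b, sum.w.b = SS.w + SS.b))
all.equal(SS.tot, SS.w + SS.b)   # TRUE, up to floating-point error
```

Summing $(\bar{Y}_k - \bar{Y})^2$ over all 18 observations is exactly the first line of the $\mbox{SS}_b$ formula: each group's squared deviation gets counted $N_k$ times.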

14.2.3 From sums of squares to the $F$-test

As we saw in the last section, the qualitative idea behind ANOVA is to compare the two sums of squares values $\mbox{SS}_b$ and $\mbox{SS}_w$ to each other: if the between-group variation $\mbox{SS}_b$ is large relative to the within-group variation $\mbox{SS}_w$ then we have reason to suspect that the population means for the different groups aren’t identical to each other. In order to convert this into a workable hypothesis test, there’s a little bit of “fiddling around” needed. What I’ll do is first show you what we do to calculate our test statistic – which is called an $F$ ratio – and then try to give you a feel for why we do it this way.

In order to convert our SS values into an $F$-ratio, the first thing we need to calculate is the degrees of freedom associated with the $\mbox{SS}_b$ and $\mbox{SS}_w$ values. As usual, the degrees of freedom corresponds to the number of unique “data points” that contribute to a particular calculation, minus the number of “constraints” that they need to satisfy. For the within-groups variability, what we’re calculating is the variation of the individual observations ($N$ data points) around the group means ($G$ constraints). In contrast, for the between groups variability, we’re interested in the variation of the group means ($G$ data points) around the grand mean (1 constraint). Therefore, the degrees of freedom here are:

$$\begin{aligned} \mbox{df}_b &= G - 1 \\ \mbox{df}_w &= N - G \end{aligned}$$

Okay, that seems simple enough. What we do next is convert our summed squares value into a “mean squares” value, which we do by dividing by the degrees of freedom:

$$\begin{aligned} \mbox{MS}_b &= \frac{\mbox{SS}_b}{\mbox{df}_b} \\ \mbox{MS}_w &= \frac{\mbox{SS}_w}{\mbox{df}_w} \end{aligned}$$

Finally, we calculate the $F$-ratio by dividing the between-groups MS by the within-groups MS:

$$F = \frac{\mbox{MS}_b}{\mbox{MS}_w}$$

At a very general level, the intuition behind the $F$ statistic is straightforward: bigger values of $F$ mean that the between-groups variation is large, relative to the within-groups variation. As a consequence, the larger the value of $F$, the more evidence we have against the null hypothesis. But how large does $F$ have to be in order to actually reject $H_0$? In order to understand this, you need a slightly deeper understanding of what ANOVA is and what the mean squares values actually are.

The next section discusses that in a bit of detail, but for readers who aren’t interested in the details of what the test is actually measuring, I’ll cut to the chase. In order to complete our hypothesis test, we need to know the sampling distribution for $F$ if the null hypothesis is true. Not surprisingly, the sampling distribution for the $F$ statistic under the null hypothesis is an $F$ distribution. If you recall back to our discussion of the $F$ distribution in Chapter 9, the $F$ distribution has two parameters, corresponding to the two degrees of freedom involved: the first one $\mbox{df}_1$ is the between groups degrees of freedom $\mbox{df}_b$, and the second one $\mbox{df}_2$ is the within groups degrees of freedom $\mbox{df}_w$.
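To get a feel for how large $F$ has to be, the sketch below asks R for the critical value of the $F$ distribution with the degrees of freedom of our clinical trial ($G = 3$ groups, $N = 18$ people). It uses qf(), the quantile function that goes with the pf() function used later in the chapter; the choice of $\alpha = .05$ is just for illustration.

```r
G <- 3    # number of groups
N <- 18   # total sample size
df.b <- G - 1   # between-groups df: first parameter of the F distribution
df.w <- N - G   # within-groups df: second parameter

# Critical value: the F that cuts off the upper 5% of F(df.b, df.w) when H0 is true
F.crit <- qf(0.95, df1 = df.b, df2 = df.w)
print(F.crit)

# Going the other way: the upper-tail probability at that value is 0.05 by construction
pf(F.crit, df1 = df.b, df2 = df.w, lower.tail = FALSE)
```

For these degrees of freedom the critical value is roughly 3.68, so an observed $F$ above that would count as significant at the .05 level.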

A summary of all the key quantities involved in a one-way ANOVA, including the formulas showing how they are calculated, is shown in Table 14.1.

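| | df | sum of squares | mean squares | $F$ statistic | $p$ value |
|---|---|---|---|---|---|
| between groups | $\mbox{df}_b = G - 1$ | $\mbox{SS}_b = \sum_{k=1}^G N_k (\bar{Y}_k - \bar{Y})^2$ | $\mbox{MS}_b = \frac{\mbox{SS}_b}{\mbox{df}_b}$ | $F = \frac{\mbox{MS}_b}{\mbox{MS}_w}$ | [complicated] |
| within groups | $\mbox{df}_w = N - G$ | $\mbox{SS}_w = \sum_{k=1}^G \sum_{i=1}^{N_k} (Y_{ik} - \bar{Y}_k)^2$ | $\mbox{MS}_w = \frac{\mbox{SS}_w}{\mbox{df}_w}$ | - | - |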

14.2.4 The model for the data and the meaning of $F$ (advanced)

At a fundamental level, ANOVA is a competition between two different statistical models, $H_0$ and $H_1$. When I described the null and alternative hypotheses at the start of the section, I was a little imprecise about what these models actually are. I’ll remedy that now, though you probably won’t like me for doing so. If you recall, our null hypothesis was that all of the group means are identical to one another. If so, then a natural way to think about the outcome variable $Y_{ik}$ is to describe individual scores in terms of a single population mean $\mu$, plus the deviation from that population mean. This deviation is usually denoted $\epsilon_{ik}$ and is traditionally called the error or residual associated with that observation. Be careful though: just like we saw with the word “significant”, the word “error” has a technical meaning in statistics that isn’t quite the same as its everyday English definition. In everyday language, “error” implies a mistake of some kind; in statistics, it doesn’t (or at least, not necessarily). With that in mind, the word “residual” is a better term than the word “error”. In statistics, both words mean “leftover variability”: that is, “stuff” that the model can’t explain. In any case, here’s what the null hypothesis looks like when we write it as a statistical model:

$$Y_{ik} = \mu + \epsilon_{ik}$$

where we make the assumption (discussed later) that the residual values $\epsilon_{ik}$ are normally distributed, with mean 0 and a standard deviation $\sigma$ that is the same for all groups. To use the notation that we introduced in Chapter 9 we would write this assumption like this:

$$\epsilon_{ik} \sim \mbox{Normal}(0, \sigma^2)$$

What about the alternative hypothesis, $H_1$? The only difference between the null hypothesis and the alternative hypothesis is that we allow each group to have a different population mean. So, if we let $\mu_k$ denote the population mean for the $k$-th group in our experiment, then the statistical model corresponding to $H_1$ is:

$$Y_{ik} = \mu_k + \epsilon_{ik}$$

where, once again, we assume that the error terms are normally distributed with mean 0 and standard deviation $\sigma$. That is, the alternative hypothesis also assumes that

$$\epsilon_{ik} \sim \mbox{Normal}(0, \sigma^2)$$

Okay, now that we’ve described the statistical models underpinning $H_0$ and $H_1$ in more detail, it’s now pretty straightforward to say what the mean square values are measuring, and what this means for the interpretation of $F$. I won’t bore you with the proof of this, but it turns out that the within-groups mean square, $\mbox{MS}_w$, can be viewed as an estimator (in the technical sense: Chapter 10) of the error variance $\sigma^2$. The between-groups mean square $\mbox{MS}_b$ is also an estimator; but what it estimates is the error variance plus a quantity that depends on the true differences among the group means. If we call this quantity $Q$, then we can see that the $F$-statistic is basically[^203]

$$F = \frac{\hat{Q} + \hat\sigma^2}{\hat\sigma^2}$$

where the true value $Q = 0$ if the null hypothesis is true, and $Q > 0$ if the alternative hypothesis is true (e.g., Hays 1994, ch. 10). Therefore, at a bare minimum the $F$ value must be larger than 1 to have any chance of rejecting the null hypothesis. Note that this doesn’t mean that it’s impossible to get an $F$-value less than 1. What it means is that, if the null hypothesis is true, the sampling distribution of the $F$ ratio has a mean of 1,[^204] and so we need to see $F$-values larger than 1 in order to safely reject the null.

To be a bit more precise about the sampling distribution, notice that if the null hypothesis is true, both $\mbox{MS}_b$ and $\mbox{MS}_w$ are estimators of the variance of the residuals $\epsilon_{ik}$. If those residuals are normally distributed, then you might suspect that the estimate of the variance of $\epsilon_{ik}$ is chi-square distributed… because (as discussed in Section 9.6) that’s what a chi-square distribution is: it’s what you get when you square a bunch of normally-distributed things and add them up. And since the $F$ distribution is (again, by definition) what you get when you take the ratio between two things that are $\chi^2$ distributed (each scaled by its degrees of freedom)… we have our sampling distribution. Obviously, I’m glossing over a whole lot of stuff when I say this, but in broad terms, this really is where our sampling distribution comes from.
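A small simulation makes these claims about the sampling distribution concrete. The sketch below repeatedly generates data from the null model $Y_{ik} = \mu + \epsilon_{ik}$ with normal residuals, using the same design as our clinical trial ($G = 3$ groups of 6), and computes $F$ from the sums of squares each time. The values $\mu = 0$ and $\sigma = 1$, the seed, and the 10,000 replications are arbitrary choices. Strictly speaking, the mean of an $F(\mbox{df}_1, \mbox{df}_2)$ distribution is $\mbox{df}_2 / (\mbox{df}_2 - 2)$ (for $\mbox{df}_2 > 2$), so with 15 within-groups degrees of freedom we should expect an average a little above 1, about 1.15.

```r
set.seed(123)   # arbitrary seed so the simulation is reproducible

G <- 3                  # number of groups
n.per.group <- 6        # people per group, as in the clinical trial
group <- factor(rep(1:G, each = n.per.group))
N <- length(group)

# F ratio computed from the sums of squares, as described in this section
f.ratio <- function(y, group) {
  gp.means <- ave(y, group)                 # Y-bar_k for every observation
  SS.b <- sum((gp.means - mean(y))^2)       # between-group sum of squares
  SS.w <- sum((y - gp.means)^2)             # within-group sum of squares
  k <- nlevels(group)
  (SS.b / (k - 1)) / (SS.w / (length(y) - k))
}

# 10,000 data sets generated under H0: Y_ik = mu + epsilon_ik, with mu = 0, sigma = 1
F.sim <- replicate(10000, f.ratio(rnorm(N), group))

mean(F.sim)                                          # near 15/13, i.e. about 1.15
mean(F.sim > qf(0.95, df1 = G - 1, df2 = N - G))     # near 0.05
```

The second line is the practical point: when the null is true, an $F$ beyond the 5% critical value turns up about 5% of the time, which is exactly the Type I error rate we signed up for.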

14.2.5 A worked example

The previous discussion was fairly abstract, and a little on the technical side, so I think that at this point it might be useful to see a worked example. For that, let’s go back to the clinical trial data that I introduced at the start of the chapter. The descriptive statistics that we calculated at the beginning tell us our group means: an average mood gain of 0.45 for the placebo, 0.72 for Anxifree, and 1.48 for Joyzepam. With that in mind, let’s party like it’s 1899[^205] and start doing some pencil and paper calculations. I’ll only do this for the first 5 observations, because it’s not bloody 1899 and I’m very lazy. Let’s start by calculating $\mbox{SS}_w$, the within-group sums of squares. First, let’s draw up a nice table to help us with our calculations…

| group ($k$) | outcome ($Y_{ik}$) |
|---|---|
| placebo | 0.5 |
| placebo | 0.3 |
| placebo | 0.1 |
| anxifree | 0.6 |
| anxifree | 0.4 |

At this stage, the only thing I’ve included in the table is the raw data itself: that is, the grouping variable (i.e., drug) and outcome variable (i.e. mood.gain) for each person. Note that the outcome variable here corresponds to the $Y_{ik}$ value in our equation previously. The next step in the calculation is to write down, for each person in the study, the corresponding group mean; that is, $\bar{Y}_k$. This is slightly repetitive, but not particularly difficult since we already calculated those group means when doing our descriptive statistics:

| group ($k$) | outcome ($Y_{ik}$) | group mean ($\bar{Y}_k$) |
|---|---|---|
| placebo | 0.5 | 0.45 |
| placebo | 0.3 | 0.45 |
| placebo | 0.1 | 0.45 |
| anxifree | 0.6 | 0.72 |
| anxifree | 0.4 | 0.72 |

Now that we’ve written those down, we need to calculate – again for every person – the deviation from the corresponding group mean. That is, we want to compute $Y_{ik} - \bar{Y}_k$. After we’ve done that, we need to square everything. When we do that, here’s what we get:

| group ($k$) | outcome ($Y_{ik}$) | group mean ($\bar{Y}_k$) | dev. from group mean ($Y_{ik} - \bar{Y}_k$) | squared deviation ($(Y_{ik} - \bar{Y}_k)^2$) |
|---|---|---|---|---|
| placebo | 0.5 | 0.45 | 0.05 | 0.0025 |
| placebo | 0.3 | 0.45 | -0.15 | 0.0225 |
| placebo | 0.1 | 0.45 | -0.35 | 0.1225 |
| anxifree | 0.6 | 0.72 | -0.12 | 0.0136 |
| anxifree | 0.4 | 0.72 | -0.32 | 0.1003 |

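The last step is equally straightforward. In order to calculate the within-group sum of squares, we just add up the squared deviations across all observations:

$$\begin{aligned} \mbox{SS}_w &= 0.0025 + 0.0225 + 0.1225 + 0.0136 + 0.1003 \\ &= 0.2614 \end{aligned}$$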

Of course, if we actually wanted to get the right answer, we’d need to do this for all 18 observations in the data set, not just the first five. We could continue with the pencil and paper calculations if we wanted to, but it’s pretty tedious. Alternatively, it’s not too hard to get R to do it. Here’s how:

outcome <- clin.trial$mood.gain            # the outcome variable, Y
group <- clin.trial$drug                   # the grouping variable (drug)
gp.means <- tapply(outcome,group,mean)     # one mean per group
gp.means <- gp.means[group]                # expand: each person's own group mean
dev.from.gp.means <- outcome - gp.means    # deviation from own group mean
squared.devs <- dev.from.gp.means ^2       # squared deviations

It might not be obvious from inspection what these commands are doing: as a general rule, the human brain seems to just shut down when faced with a big block of programming. However, I strongly suggest that – if you’re like me and tend to find that the mere sight of this code makes you want to look away and see if there’s any beer left in the fridge or a game of footy on the telly – you take a moment and look closely at these commands one at a time. Every single one of these commands is something you’ve seen before somewhere else in the book. There’s nothing novel about them (though I’ll admit that the tapply() function takes a while to get a handle on), so if you’re not quite sure how these commands work, this might be a good time to try playing around with them yourself, to try to get a sense of what’s happening. On the other hand, if this does seem to make sense, then you won’t be all that surprised at what happens when I wrap these variables in a data frame, and print it out…

Y <- data.frame( group, outcome, gp.means,
                 dev.from.gp.means, squared.devs )

print(Y, digits = 2)

## group outcome gp.means dev.from.gp.means squared.devs

## 1 placebo 0.5 0.45 0.050 0.0025

## 2 placebo 0.3 0.45 -0.150 0.0225

## 3 placebo 0.1 0.45 -0.350 0.1225

## 4 anxifree 0.6 0.72 -0.117 0.0136

## 5 anxifree 0.4 0.72 -0.317 0.1003

## 6 anxifree 0.2 0.72 -0.517 0.2669

## 7 joyzepam 1.4 1.48 -0.083 0.0069

## 8 joyzepam 1.7 1.48 0.217 0.0469

## 9 joyzepam 1.3 1.48 -0.183 0.0336

## 10 placebo 0.6 0.45 0.150 0.0225

## 11 placebo 0.9 0.45 0.450 0.2025

## 12 placebo 0.3 0.45 -0.150 0.0225

## 13 anxifree 1.1 0.72 0.383 0.1469

## 14 anxifree 0.8 0.72 0.083 0.0069

## 15 anxifree 1.2 0.72 0.483 0.2336

## 16 joyzepam 1.8 1.48 0.317 0.1003

## 17 joyzepam 1.3 1.48 -0.183 0.0336

## 18 joyzepam 1.4 1.48 -0.083 0.0069

If you compare this output to the contents of the table I’ve been constructing by hand, you can see that R has done exactly the same calculations that I was doing, and much faster too. So, if we want to finish the calculations of the within-group sum of squares in R, we just ask for the sum() of the squared.devs variable:

SSw <- sum( squared.devs )

print( SSw )

## [1] 1.391667

Obviously, this isn’t the same as what I calculated, because R used all 18 observations. But if I’d typed sum( squared.devs[1:5] ) instead, it would have given the same answer that I got earlier.

Okay. Now that we’ve calculated the within groups variation, $\mbox{SS}_w$, it’s time to turn our attention to the between-group sum of squares, $\mbox{SS}_b$. The calculations for this case are very similar. The main difference is that, instead of calculating the differences between an observation $Y_{ik}$ and a group mean $\bar{Y}_k$ for all of the observations, we calculate the differences between the group means $\bar{Y}_k$ and the grand mean $\bar{Y}$ (in this case 0.88) for all of the groups…

| group ($k$) | group mean ($\bar{Y}_k$) | grand mean ($\bar{Y}$) | deviation ($\bar{Y}_k - \bar{Y}$) | squared deviations ($(\bar{Y}_k - \bar{Y})^2$) |
|---|---|---|---|---|
| placebo | 0.45 | 0.88 | -0.43 | 0.18 |
| anxifree | 0.72 | 0.88 | -0.16 | 0.03 |
| joyzepam | 1.48 | 0.88 | 0.60 | 0.36 |

However, for the between group calculations we need to multiply each of these squared deviations by $N_k$, the number of observations in the group. We do this because every observation in the group (all $N_k$ of them) is associated with a between group difference. So if there are six people in the placebo group, and the placebo group mean differs from the grand mean by $-0.43$ (a squared deviation of about $0.185$), then the total between group variation associated with these six people is $6 \times 0.185 \approx 1.11$. So we have to extend our little table of calculations…

| group ($k$) | squared deviations ($(\bar{Y}_k - \bar{Y})^2$) | sample size ($N_k$) | weighted squared dev ($N_k (\bar{Y}_k - \bar{Y})^2$) |
|---|---|---|---|
| placebo | 0.18 | 6 | 1.11 |
| anxifree | 0.03 | 6 | 0.16 |
| joyzepam | 0.36 | 6 | 2.18 |

And so now our between group sum of squares is obtained by summing these “weighted squared deviations” over all three groups in the study:

$$\begin{aligned} \mbox{SS}_b &= 1.11 + 0.16 + 2.18 \\ &= 3.45 \end{aligned}$$

As you can see, the between group calculations are a lot shorter, so you probably wouldn’t usually want to bother using R as your calculator. However, if you did decide to do so, here’s one way you could do it:

gp.means <- tapply(outcome,group,mean)          # one mean per group
grand.mean <- mean(outcome)                     # mean of all 18 observations
dev.from.grand.mean <- gp.means - grand.mean    # group mean minus grand mean
squared.devs <- dev.from.grand.mean ^2          # squared deviations
gp.sizes <- tapply(outcome,group,length)        # N_k, the size of each group
wt.squared.devs <- gp.sizes * squared.devs      # weight each squared deviation by N_k

Again, I won’t actually try to explain this code line by line, but – just like last time – there’s nothing in there that we haven’t seen in several places elsewhere in the book, so I’ll leave it as an exercise for you to make sure you understand it. Once again, we can dump all our variables into a data frame so that we can print it out as a nice table:

Y <- data.frame( gp.means, grand.mean, dev.from.grand.mean,
                 squared.devs, gp.sizes, wt.squared.devs )

print(Y, digits = 2)

## gp.means grand.mean dev.from.grand.mean squared.devs gp.sizes

## placebo 0.45 0.88 -0.43 0.188 6

## anxifree 0.72 0.88 -0.17 0.028 6

## joyzepam 1.48 0.88 0.60 0.360 6

## wt.squared.devs

## placebo 1.13

## anxifree 0.17

## joyzepam 2.16

Clearly, these are basically the same numbers that we got before. There are a few tiny differences, but that’s only because the hand-calculated versions have some small errors caused by the fact that I rounded all my numbers to 2 decimal places at each step in the calculations, whereas R only does it at the end (obviously, R’s version is more accurate). Anyway, here’s the R command showing the final step:

SSb <- sum( wt.squared.devs )

print( SSb )

## [1] 3.453333

which is (ignoring the slight differences due to rounding error) the same answer that I got when doing things by hand.

Now that we’ve calculated our sums of squares values, $\mbox{SS}_b$ and $\mbox{SS}_w$, the rest of the ANOVA is pretty painless. The next step is to calculate the degrees of freedom. Since we have $G = 3$ groups and $N = 18$ observations in total, our degrees of freedom can be calculated by simple subtraction:

$$\begin{aligned} \mbox{df}_b &= G - 1 = 2 \\ \mbox{df}_w &= N - G = 15 \end{aligned}$$

Next, since we’ve now calculated the values for the sums of squares and the degrees of freedom, for both the within-groups variability and the between-groups variability, we can obtain the mean square values by dividing one by the other:

$$\begin{aligned} \mbox{MS}_b &= \frac{\mbox{SS}_b}{\mbox{df}_b} = \frac{3.45}{2} = 1.73 \\ \mbox{MS}_w &= \frac{\mbox{SS}_w}{\mbox{df}_w} = \frac{1.39}{15} = 0.093 \end{aligned}$$

We’re almost done. The mean square values can be used to calculate the $F$-value, which is the test statistic that we’re interested in. We do this by dividing the between-groups MS value by the within-groups MS value:

$$F = \frac{\mbox{MS}_b}{\mbox{MS}_w} = \frac{1.73}{0.093} = 18.6$$

Woohooo! This is terribly exciting, yes? Now that we have our test statistic, the last step is to find out whether the test itself gives us a significant result. As discussed in Chapter 11, what we really ought to do is choose an $\alpha$ level (i.e., acceptable Type I error rate) ahead of time, construct our rejection region, etc etc. But in practice it’s just easier to directly calculate the $p$-value. Back in the “old days”, what we’d do is open up a statistics textbook or something and flick to the back section which would actually have a huge lookup table… that’s how we’d “compute” our $p$-value, because it’s too much effort to do it any other way. However, since we have access to R, I’ll use the pf() function to do it instead. Now, remember that I explained earlier that the $F$-test is always one sided? And that we only reject the null hypothesis for very large $F$-values? That means we’re only interested in the upper tail of the $F$-distribution. The command that you’d use here would be this…

```r
pf( 18.6, df1 = 2, df2 = 15, lower.tail = FALSE )
## [1] 8.672727e-05
```

Therefore, our $p$-value comes to 0.0000867, or $8.67 \times 10^{-5}$ in scientific notation. So, unless we’re being extremely conservative about our Type I error rate, we’re pretty much guaranteed to reject the null hypothesis.
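To see the whole chain in one place, here is a minimal R sketch that goes from the two sums of squares to the degrees of freedom, mean squares, $F$ and its upper-tail $p$-value, and then lays them out as an ANOVA-style table. It hard-codes the SS values reported above so that it runs on its own; names such as df.b, MS.w and anova.table are illustrative choices, not anything R provides.

```r
# Sums of squares from the calculations above (hard-coded so the sketch is self-contained)
SSb <- 3.453333   # between-groups sum of squares
SSw <- 1.391667   # within-groups sum of squares
G <- 3            # number of groups
N <- 18           # total number of observations

# Degrees of freedom
df.b <- G - 1     # between groups
df.w <- N - G     # within groups

# Mean squares: each sum of squares divided by its degrees of freedom
MS.b <- SSb / df.b
MS.w <- SSw / df.w

# F statistic, and the p-value from the upper tail of the F distribution only
F.stat <- MS.b / MS.w
p <- pf(F.stat, df1 = df.b, df2 = df.w, lower.tail = FALSE)

# Collect everything into an ANOVA-style table
anova.table <- data.frame(
  df = c(df.b, df.w),
  SS = c(SSb, SSw),
  MS = c(MS.b, MS.w),
  F  = c(F.stat, NA),
  p  = c(p, NA),
  row.names = c("between groups", "within groups")
)
print(anova.table)
```

Because nothing is rounded along the way, $F$ comes out as about 18.61 here rather than the 18.6 obtained by hand.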

At this point, we’re basically done. Having completed our calculations, it’s traditional to organise all these numbers into an ANOVA table like the one in Table 14.1. For our clinical trial data, the ANOVA table would look like this:

| | df | sum of squares | mean squares | $F$-statistic | $p$-value |
|---|---|---|---|---|---|
| between groups | 2 | 3.45 | 1.73 | 18.6 | $8.67 \times 10^{-5}$ |
| within groups | 15 | 1.39 | 0.09 | - | - |

These days, you’ll probably never have much reason to want to construct one of these tables yourself, but you will find that almost all statistical software (R included) tends to organise the output of an ANOVA into a table like this, so it’s a good idea to get used to reading them. However, although the software will output a full ANOVA table, there’s almost never a good reason to include the whole table in your write up. A pretty standard way of reporting this result would be to write something like this:

One-way ANOVA showed a significant effect of drug on mood gain ($F(2,15) = 18.6, p < .001$).

Sigh. So much work for one short sentence.

14.3 Running an ANOVA in R

I’m pretty sure I know what you’re thinking after reading the last section, especially if you followed my advice and tried typing all the commands in yourself… doing the ANOVA calculations yourself sucks. There are quite a lot of calculations that we needed to do along the way, and it would be tedious to have to do this over and over again every time you wanted to do an ANOVA. One possible solution to the problem would be to take all these calculations and turn them into some R functions yourself. You’d still have to do a lot of typing, but at least you’d only have to do it the one time: once you’ve created the functions, you can reuse them over and over again. However, writing your own functions is a lot of work, so this is kind of a last resort. Besides, it’s much better if someone else does all the work for you…

14.3.1 Using the aov() function to specify your ANOVA

To make life easier for you, R provides a function called aov(), which – obviously – is an acronym of “Analysis Of Variance.”206 If you type ?aov and have a look at the help documentation, you’ll see that there are several arguments to the aov() function, but the only two that we’re interested in are formula and data. As we’ve seen in a few places previously, the formula argument is what you use to specify the outcome variable and the grouping variable, and the data argument is what you use to specify the data frame that stores these variables. In other words, to do the same ANOVA that I laboriously calculated in the previous section, I’d use a command like this:

```r
aov( formula = mood.gain ~ drug, data = clin.trial )
```

Actually, that’s not quite the whole story, as you’ll see as soon as you look at the output from this command, which I’ve hidden for the moment in order to avoid confusing you. Before we go into specifics, I should point out that either of these commands will do the same thing:

```r
aov( clin.trial$mood.gain ~ clin.trial$drug )
aov( mood.gain ~ drug, clin.trial )
```

In the first command, I didn’t specify a data set, and instead relied on the $ operator to tell R how to find the variables. In the second command, I dropped the argument names, which is okay in this case because formula is the first argument to the aov() function, and data is the second one. Regardless of how I specify the ANOVA, I can assign the output of the aov() function to a variable, like this for example:

```r
my.anova <- aov( mood.gain ~ drug, clin.trial )
```

This is almost always a good thing to do, because there are lots of useful things that we can do with the my.anova variable. So let’s assume that it’s this last command that I used to specify the ANOVA that I’m trying to run, and as a consequence I have this my.anova variable sitting in my workspace, waiting for me to do something with it…
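If you want to convince yourself that these calling styles really fit the same model, the sketch below compares their coefficients. It assumes you don't have clin.trial loaded, so it rebuilds a stand-in data frame whose mood.gain values reproduce the group means and sums of squares reported in this chapter; the row order is an assumption, and it doesn't affect the ANOVA.

```r
# Stand-in for clin.trial (assumed values that reproduce this chapter's sums of squares)
clin.trial <- data.frame(
  drug = factor(rep(c("placebo", "anxifree", "joyzepam"), each = 6),
                levels = c("placebo", "anxifree", "joyzepam")),
  mood.gain = c(0.5, 0.3, 0.1, 0.6, 0.9, 0.3,   # placebo
                0.6, 0.4, 0.2, 1.1, 0.8, 1.2,   # anxifree
                1.4, 1.7, 1.3, 1.8, 1.3, 1.4)   # joyzepam
)

# Three ways of specifying the same one-way ANOVA
a1 <- aov(formula = mood.gain ~ drug, data = clin.trial)   # named arguments
a2 <- aov(clin.trial$mood.gain ~ clin.trial$drug)           # $ operator, no data argument
a3 <- aov(mood.gain ~ drug, clin.trial)                     # positional arguments

# a2's coefficient names include "clin.trial$drug", so compare the values only
all.equal(unname(coef(a1)), unname(coef(a2)))
all.equal(unname(coef(a1)), unname(coef(a3)))
```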

14.3.2 Understanding what the aov() function produces

Now that we’ve seen how to use the aov() function to create my.anova we’d better have a look at what this variable actually is. The first thing to do is to check to see what class of variable we’ve created, since it’s kind of interesting in this case. When we do that…

```r
class( my.anova )
## [1] "aov" "lm"
```

… we discover that my.anova actually has two classes! The first class tells us that it’s an aov (analysis of variance) object, but the second tells us that it’s also an lm (linear model) object. Later on, we’ll see that this reflects a pretty deep statistical relationship between ANOVA and regression (Chapter 15), and it means that any function that exists in R for dealing with regressions can also be applied to aov objects, which is neat; but I’m getting ahead of myself. For now, I want to note that what we’ve created is an aov object, and to also make the point that aov objects are actually rather complicated beasts. I won’t be trying to explain everything about them, since it’s way beyond the scope of an introductory statistics subject, but to give you a tiny hint of some of the stuff that R stores inside an aov object, let’s ask it to print out the names() of all the stored quantities…

```r
names( my.anova )
## [1] "coefficients" "residuals" "effects" "rank"
## [5] "fitted.values" "assign" "qr" "df.residual"
## [9] "contrasts" "xlevels" "call" "terms"
## [13] "model"
```

As we go through the rest of the book, I hope that a few of these will become a little more obvious to you, but right now that’s going to look pretty damned opaque. That’s okay. You don’t need to know any of the details about it right now, and most of it you don’t need at all… what you do need to understand is that the aov() function does a lot of calculations for you, not just the basic ones that I outlined in the previous sections. What this means is that it’s generally a good idea to create a variable like my.anova that stores the output of the aov() function… because later on, you can use my.anova as an input to lots of other functions: those other functions can pull out bits and pieces from the aov object, and calculate various other things that you might need.
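As a small illustration of pulling “bits and pieces” out of an aov object, the sketch below recovers the within-groups degrees of freedom and both sums of squares from my.anova, using the df.residual element listed above and the standard accessor functions residuals() and fitted(). The clin.trial data frame is again a stand-in whose values are assumed to reproduce this chapter’s numbers.

```r
# Stand-in for clin.trial (assumed values that reproduce this chapter's sums of squares)
clin.trial <- data.frame(
  drug = factor(rep(c("placebo", "anxifree", "joyzepam"), each = 6),
                levels = c("placebo", "anxifree", "joyzepam")),
  mood.gain = c(0.5, 0.3, 0.1, 0.6, 0.9, 0.3,
                0.6, 0.4, 0.2, 1.1, 0.8, 1.2,
                1.4, 1.7, 1.3, 1.8, 1.3, 1.4)
)
my.anova <- aov(mood.gain ~ drug, data = clin.trial)

my.anova$df.residual                     # within-groups degrees of freedom
SSw <- sum(residuals(my.anova)^2)        # within-groups sum of squares
SSb <- sum((fitted(my.anova) - mean(clin.trial$mood.gain))^2)  # between-groups SS
c(SSb = SSb, SSw = SSw)

# Each fitted value is just the mean of that observation's group
tapply(fitted(my.anova), clin.trial$drug, mean)
```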

Right then. The simplest thing you can do with an aov object is to print() it out. When we do that, it shows us a few of the key quantities of interest:

```r
print( my.anova )
## Call:
## aov(formula = mood.gain ~ drug, data = clin.trial)
##
## Terms:
## drug Residuals
## Sum of Squares 3.453333 1.391667
## Deg. of Freedom 2 15
##
## Residual standard error: 0.3045944
## Estimated effects may be unbalanced
```

Specifically, it prints out a reminder of the command that you used when you called aov() in the first place, shows you the sums of squares values, the degrees of freedom, and a couple of other quantities that we’re not really interested in right now. Notice, however, that R doesn’t use the names “between-group” and “within-group”. Instead, it tries to assign more meaningful names: in our particular example, the between-groups variance corresponds to the effect that the drug has on the outcome variable, and the within-groups variance corresponds to the “leftover” variability, so it calls that the residuals. If we compare these numbers to the numbers that I calculated by hand in Section 14.2.5, you can see that they’re identical… the between-groups sum of squares is $\mbox{SS}_b = 3.45$, the within-groups sum of squares is $\mbox{SS}_w = 1.39$, and the degrees of freedom are 2 and 15 respectively.

14.3.3 Running the hypothesis tests for the ANOVA

Okay, so we’ve verified that my.anova seems to be storing a bunch of the numbers that we’re looking for, but the print() function didn’t quite give us the output that we really wanted. Where’s the $F$-value? The $p$-value? These are the most important numbers in our hypothesis test, but the print() function doesn’t provide them. To get those numbers, we need to use a different function. Instead of asking R to print() out the aov object, we should have asked for a summary() of it.207 When we do that…

```r
summary( my.anova )
## Df Sum Sq Mean Sq F value Pr(>F)
## drug 2 3.453 1.7267 18.61 8.65e-05 ***
## Residuals 15 1.392 0.0928
## ---
## Signif. codes: 0 '***' 0.001 '**' 0.01 '*' 0.05 '.' 0.1 ' ' 1
```

… we get all of the key numbers that we calculated earlier. We get the sums of squares, the degrees of freedom, the mean squares, the $F$-statistic, and the $p$-value itself. These are all identical to the numbers that we calculated ourselves when doing it the long and tedious way, and it’s even organised into the same kind of ANOVA table that I showed in Table 14.1, and then filled out by hand in Section 14.2.5. The only difference is that some of the row and column names are a bit different.
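If you need these numbers inside a script (say, to fill in a write-up sentence like the one shown earlier), you can pull them out of the summary rather than copying them by hand. A minimal sketch, assuming the stand-in clin.trial data below; it relies on summary() of an aov object returning a list with one ANOVA table per error stratum, and a simple one-way design has only one.

```r
# Stand-in for clin.trial (assumed values that reproduce this chapter's sums of squares)
clin.trial <- data.frame(
  drug = factor(rep(c("placebo", "anxifree", "joyzepam"), each = 6),
                levels = c("placebo", "anxifree", "joyzepam")),
  mood.gain = c(0.5, 0.3, 0.1, 0.6, 0.9, 0.3,
                0.6, 0.4, 0.2, 1.1, 0.8, 1.2,
                1.4, 1.7, 1.3, 1.8, 1.3, 1.4)
)
my.anova <- aov(mood.gain ~ drug, data = clin.trial)

# The first (and only) stratum's table; row 1 is drug, row 2 is Residuals
tab <- summary(my.anova)[[1]]

F.stat  <- tab[["F value"]][1]
p.value <- tab[["Pr(>F)"]][1]
df.b    <- as.integer(tab[["Df"]][1])
df.w    <- as.integer(tab[["Df"]][2])

# Assemble the statistics for a write-up
sprintf("F(%d,%d) = %.1f, p = %.2g", df.b, df.w, F.stat, p.value)
```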

14.4 Effect size

There are a few different ways you could measure the effect size in an ANOVA, but the most commonly used measures are $\eta^2$ (eta squared) and partial $\eta^2$. For a one-way analysis of variance they’re identical to each other, so for the moment I’ll just explain $\eta^2$. The definition of $\eta^2$ is actually really simple:

$$
\eta^2 = \frac{\mbox{SS}_b}{\mbox{SS}_{tot}}
$$

That’s all it is. So when I look at the ANOVA table above, I see that $\mbox{SS}_b = 3.45$ and $\mbox{SS}_{tot} = 3.45 + 1.39 = 4.84$. Thus we get an $\eta^2$ value of

$$
\eta^2 = \frac{3.45}{4.84} = 0.71
$$

The interpretation of $\eta^2$ is equally straightforward: it refers to the proportion of the variability in the outcome variable (mood.gain) that can be explained in terms of the predictor (drug). A value of $\eta^2 = 0$ means that there is no relationship at all between the two, whereas a value of $\eta^2 = 1$ means that the relationship is perfect. Better yet, the $\eta^2$ value is very closely related to a squared correlation (i.e., $r^2$). So, if you’re trying to figure out whether a particular value of $\eta^2$ is big or small, it’s sometimes useful to remember that

$$
\eta = \sqrt{\eta^2}
$$

can be interpreted as if it referred to the magnitude of a Pearson correlation. So in our drugs example, the $\eta^2$ value of .71 corresponds to an $\eta$ value of $\sqrt{.71} = .84$. If we think about this as being equivalent to a correlation of about .84, we’d conclude that the relationship between drug and mood.gain is strong.

The core packages in R don’t include any functions for calculating $\eta^2$. However, it’s pretty straightforward to calculate it directly from the numbers in the ANOVA table. In fact, since I’ve already got the SSw and SSb variables lying around from my earlier calculations, I can do this:

```r
SStot <- SSb + SSw          # total sums of squares
eta.squared <- SSb / SStot  # eta-squared value
print( eta.squared )
## [1] 0.7127623
```

However, since it can be tedious to do this the long way (especially when we start running more complicated ANOVAs, such as those in Chapter 16), I’ve included an etaSquared() function in the lsr package which will do it for you. For now, the only argument you need to care about is x, which should be the aov object corresponding to your ANOVA. When we do this, what we get as output is this:

```r
library( lsr )   # provides etaSquared()
etaSquared( x = my.anova )
## eta.sq eta.sq.part
## drug 0.7127623 0.7127623
```

The output here shows two different numbers. The first one corresponds to the $\eta^2$ statistic, precisely as described above. The second one refers to “partial $\eta^2$”, which is a somewhat different measure of effect size that I’ll describe later. For the simple ANOVA that we’ve just run, they’re the same number. But this won’t always be true once we start running more complicated ANOVAs.208
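If you’d rather not depend on lsr, the same $\eta^2$ can be computed straight from the sums of squares that summary() reports. The sketch below does this, along with $\eta = \sqrt{\eta^2}$ for the correlation-style reading described above. It uses an assumed stand-in for clin.trial that reproduces this chapter’s sums of squares.

```r
# Stand-in for clin.trial (assumed values that reproduce this chapter's sums of squares)
clin.trial <- data.frame(
  drug = factor(rep(c("placebo", "anxifree", "joyzepam"), each = 6),
                levels = c("placebo", "anxifree", "joyzepam")),
  mood.gain = c(0.5, 0.3, 0.1, 0.6, 0.9, 0.3,
                0.6, 0.4, 0.2, 1.1, 0.8, 1.2,
                1.4, 1.7, 1.3, 1.8, 1.3, 1.4)
)
my.anova <- aov(mood.gain ~ drug, data = clin.trial)
tab <- summary(my.anova)[[1]]

SSb <- tab[["Sum Sq"]][1]          # between groups (drug)
SSw <- tab[["Sum Sq"]][2]          # within groups (residuals)
eta.squared <- SSb / (SSb + SSw)   # proportion of variability explained by drug
eta <- sqrt(eta.squared)           # correlation-like scale

round(c(eta.squared = eta.squared, eta = eta), 3)
```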

14.5 Multiple comparisons and post hoc tests

Any time you run an ANOVA with more than two groups, and you end up with a significant effect, the first thing you’ll probably want to ask is which groups are actually different from one another. In our drugs example, our null hypothesis was that all three drugs (placebo, Anxifree and Joyzepam) have the exact same effect on mood. But if you think about it, the null hypothesis is actually claiming three different things all at once here. Specifically, it claims that:

- Your competitor’s drug (Anxifree) is no better than a placebo (i.e., $\mu_A = \mu_P$)

- Your drug (Joyzepam) is no better than a placebo (i.e., $\mu_J = \mu_P$)

- Anxifree and Joyzepam are equally effective (i.e., $\mu_J = \mu_A$)

If any one of those three claims is false, then the null hypothesis is also false. So, now that we’ve rejected our null hypothesis, we’re thinking that at least one of those things isn’t true. But which ones? All three of these propositions are of interest: you certainly want to know if your new drug Joyzepam is better than a placebo, and it would be nice to know how well it stacks up against an existing commercial alternative (i.e., Anxifree). It would even be useful to check the performance of Anxifree against the placebo: even if Anxifree has already been extensively tested against placebos by other researchers, it can still be very useful to check that your study is producing similar results to earlier work.

When we characterise the null hypothesis in terms of these three distinct propositions, it becomes clear that there are eight possible “states of the world” that we need to distinguish between:

| possibility: | is $\mu_P = \mu_A$? | is $\mu_P = \mu_J$? | is $\mu_A = \mu_J$? | which hypothesis? |
|---|---|---|---|---|
| 1 | ✓ | ✓ | ✓ | null |
| 2 | ✓ | ✓ | | alternative |
| 3 | ✓ | | ✓ | alternative |
| 4 | ✓ | | | alternative |
| 5 | | ✓ | ✓ | alternative |
| 6 | | ✓ | | alternative |
| 7 | | | ✓ | alternative |
| 8 | | | | alternative |

By rejecting the null hypothesis, we’ve decided that we don’t believe that #1 is the true state of the world. The next question to ask is, which of the other seven possibilities do we think is right? When faced with this situation, it usually helps to look at the data. For instance, if we look at the plots in Figure 14.1, it’s tempting to conclude that Joyzepam is better than the placebo and better than Anxifree, but there’s no real difference between Anxifree and the placebo. However, if we want to get a clearer answer about this, it might help to run some tests.
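A quick numerical companion to eyeballing the plots is to tabulate the group means and standard deviations, plus every pairwise difference between means. A minimal sketch, using an assumed stand-in for clin.trial whose values reproduce this chapter’s group means:

```r
# Stand-in for clin.trial (assumed values that reproduce this chapter's group means)
clin.trial <- data.frame(
  drug = factor(rep(c("placebo", "anxifree", "joyzepam"), each = 6),
                levels = c("placebo", "anxifree", "joyzepam")),
  mood.gain = c(0.5, 0.3, 0.1, 0.6, 0.9, 0.3,
                0.6, 0.4, 0.2, 1.1, 0.8, 1.2,
                1.4, 1.7, 1.3, 1.8, 1.3, 1.4)
)

group.means <- tapply(clin.trial$mood.gain, clin.trial$drug, mean)
group.sds   <- tapply(clin.trial$mood.gain, clin.trial$drug, sd)
round(group.means, 2)
round(group.sds, 2)

# Every pairwise difference between group means (row group minus column group)
round(outer(group.means, group.means, "-"), 2)
```

The Joyzepam row should stand out with the largest differences, which is the pattern the pairwise tests below try to put on a firmer footing.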

14.5.1 Running “pairwise” $t$-tests

How might we go about solving our problem? Given that we’ve got three separate pairs of means (placebo versus Anxifree, placebo versus Joyzepam, and Anxifree versus Joyzepam) to compare, what we could do is run three separate $t$-tests and see what happens. There are a couple of ways that we could do this. One method would be to construct new variables corresponding to the groups you want to compare (e.g., anxifree, placebo and joyzepam), and then run a $t$-test on these new variables:

```r
anxifree <- with(clin.trial, mood.gain[drug == "anxifree"])  # mood change due to anxifree
placebo <- with(clin.trial, mood.gain[drug == "placebo"])    # mood change due to placebo
t.test( anxifree, placebo, var.equal = TRUE )                # Student t-test
```

Or, you could use the subset argument in the t.test() function to select only those observations corresponding to one of the two groups we’re interested in:

```r
t.test( formula = mood.gain ~ drug,
        data = clin.trial,
        subset = drug %in% c("placebo","anxifree"),
        var.equal = TRUE
)
```

See Chapter 7 if you’ve forgotten how the %in% operator works. Regardless of which version we do, R will print out the results of the $t$-test, though I haven’t included that output here. If we go on to do this for all possible pairs of variables, we can look to see which (if any) pairs of groups are significantly different to each other. This “lots of $t$-tests” idea isn’t a bad strategy, though as we’ll see later on there are some problems with it. However, for the moment our bigger problem is that it’s a pain to have to type in such a long command over and over again: for instance, if your experiment has 10 groups, then you have to run 45 $t$-tests. That’s way too much typing.
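One way to avoid retyping the command for every pair is to let combn() enumerate the pairs and loop over them. A minimal sketch, assuming the stand-in clin.trial data below; each call runs an ordinary Student $t$-test on just the two groups involved.

```r
# Stand-in for clin.trial (assumed values that reproduce this chapter's sums of squares)
clin.trial <- data.frame(
  drug = factor(rep(c("placebo", "anxifree", "joyzepam"), each = 6),
                levels = c("placebo", "anxifree", "joyzepam")),
  mood.gain = c(0.5, 0.3, 0.1, 0.6, 0.9, 0.3,
                0.6, 0.4, 0.2, 1.1, 0.8, 1.2,
                1.4, 1.7, 1.3, 1.8, 1.3, 1.4)
)

groups <- levels(clin.trial$drug)
pairs  <- combn(groups, 2)   # 2 x 3 matrix: one column per pair of groups

raw.p <- apply(pairs, 2, function(pr) {
  x <- clin.trial$mood.gain[clin.trial$drug == pr[1]]
  y <- clin.trial$mood.gain[clin.trial$drug == pr[2]]
  t.test(x, y, var.equal = TRUE)$p.value   # Student t-test on these two groups only
})
names(raw.p) <- apply(pairs, 2, paste, collapse = " vs ")
round(raw.p, 5)

choose(10, 2)   # number of pairwise tests you'd need with 10 groups
```

Because each test here uses only its own two groups, these $p$-values won’t exactly match pairwise.t.test(), which by default pools the standard deviation across all groups (its pool.sd argument).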

To help keep the typing to a minimum, R provides a function called pairwise.t.test() that automatically runs all of the $t$-tests for you. There are three arguments that you need to specify: the outcome variable x, the group variable g, and the p.adjust.method argument, which “adjusts” the $p$-value in one way or another. I’ll explain $p$-value adjustment in a moment, but for now we can just set p.adjust.method = "none" since we’re not doing any adjustments. For our example, here’s what we do:

```r
pairwise.t.test( x = clin.trial$mood.gain,   # outcome variable
                 g = clin.trial$drug,        # grouping variable
                 p.adjust.method = "none"    # which correction to use?
)
##
## Pairwise comparisons using t tests with pooled SD
##
## data: clin.trial$mood.gain and clin.trial$drug
##
## placebo anxifree
## anxifree 0.15021 -
## joyzepam 3e-05 0.00056
##
## P value adjustment method: none
```

One thing that bugs me slightly about the pairwise.t.test() function is that you can’t just give it an aov object, and have it produce this output. After all, I went to all that trouble earlier of getting R to create the my.anova variable and – as we saw in Section 14.3.2 – R has actually stored enough information inside it that I should just be able to get it to run all the pairwise tests using my.anova as an input. To that end, I’ve included a posthocPairwiseT() function in the lsr package that lets you do this. The idea behind this function is that you can just input the aov object itself,209 and then get the pairwise tests as an output. As of the current writing, posthocPairwiseT() is actually just a simple way of calling the pairwise.t.test() function, but you should be aware that I intend to make some changes to it later on. Here’s an example:

```r
posthocPairwiseT( x = my.anova, p.adjust.method = "none" )
##
## Pairwise comparisons using t tests with pooled SD
##
## data: mood.gain and drug
##
## placebo anxifree
## anxifree 0.15021 -
## joyzepam 3e-05 0.00056
##
## P value adjustment method: none
```

In later versions, I plan to add more functionality (e.g., adjusted confidence intervals), but for now I think it’s at least kind of useful. To see why, let’s suppose you’ve run your ANOVA and stored the results in my.anova, and you’re happy using the Holm correction (the default method in pairwise.t.test(), which I’ll explain in a moment). In that case, all you have to do is type this:

```r
posthocPairwiseT( my.anova )
```

and R will output the test results. Much more convenient, I think.
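If lsr isn’t available, base R’s pairwise.t.test() gives the same kind of output, and its result object stores the $p$-values as a matrix you can work with directly. The sketch below, using an assumed stand-in for clin.trial, checks two things: that the default adjustment is Holm, and that the adjusted matrix is simply p.adjust() applied to the unadjusted one.

```r
# Stand-in for clin.trial (assumed values that reproduce this chapter's sums of squares)
clin.trial <- data.frame(
  drug = factor(rep(c("placebo", "anxifree", "joyzepam"), each = 6),
                levels = c("placebo", "anxifree", "joyzepam")),
  mood.gain = c(0.5, 0.3, 0.1, 0.6, 0.9, 0.3,
                0.6, 0.4, 0.2, 1.1, 0.8, 1.2,
                1.4, 1.7, 1.3, 1.8, 1.3, 1.4)
)

raw  <- pairwise.t.test(clin.trial$mood.gain, clin.trial$drug, p.adjust.method = "none")
holm <- pairwise.t.test(clin.trial$mood.gain, clin.trial$drug)   # default adjustment

holm$p.adjust.method   # which correction was applied
raw$p.value            # lower-triangular matrix of unadjusted p-values
holm$p.value

# The adjusted matrix is p.adjust() applied to the unadjusted p-values
lt <- lower.tri(raw$p.value, diag = TRUE)
all.equal(holm$p.value[lt], p.adjust(raw$p.value[lt], method = "holm"))
```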

14.5.2 Corrections for multiple testing

In the previous section I hinted that there’s a problem with just running lots and lots of $t$-tests. The concern is that when running these analyses, what we’re doing is going on a “fishing expedition”: we’re running lots and lots of tests without much theoretical guidance, in the hope that some of them come up significant. This kind of theory-free search for group differences is referred to as post hoc analysis (“post hoc” being Latin for “after this”).210

It’s okay to run post hoc analyses, but a lot of care is required. For instance, the analysis that I ran in the previous section is actually pretty dangerous: each individual $t$-test is designed to have a 5% Type I error rate (i.e., $\alpha = .05$), and I ran three of these tests. Imagine what would have happened if my ANOVA involved 10 different groups, and I had decided to run 45 “post hoc” $t$-tests to try to find out which ones were significantly different from each other: you’d expect 2 or 3 of them to come up significant by chance alone. As we saw in Chapter 11, the central organising principle behind null hypothesis testing is that we seek to control our Type I error rate, but now that I’m running lots of $t$-tests at once, in order to determine the source of my ANOVA results, my actual Type I error rate across this whole family of tests has gotten completely out of control.
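The arithmetic behind “2 or 3 by chance alone” is easy to check. The sketch below computes the expected number of false positives, $m\alpha$, and the chance of at least one Type I error, $1 - (1 - \alpha)^m$, for a few family sizes $m$. The second formula assumes the tests are independent, which pairwise comparisons that share a group are not, so treat it as a rough guide only.

```r
alpha <- 0.05
m <- c(1, 3, 10, 45)   # number of tests in the family

# Chance of at least one Type I error if every null is true and the tests are independent
fwer <- 1 - (1 - alpha)^m
# Expected number of false positives when every null is true
expected.false <- m * alpha

data.frame(tests = m, familywise.error = round(fwer, 3), expected.false = expected.false)
```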

The usual solution to this problem is to introduce an adjustment to the $p$-value, which aims to control the total error rate across the family of tests (see Shaffer 1995). An adjustment of this form, which is usually (but not always) applied because one is doing post hoc analysis, is often referred to as a correction for multiple comparisons, though it is sometimes referred to as “simultaneous inference”. In any case, there are quite a few different ways of doing this adjustment. I’ll discuss a few of them in this section and in Section 16.8, but you should be aware that there are many other methods out there (see, e.g., Hsu 1996).

14.5.3 Bonferroni corrections

The simplest of these adjustments is called the Bonferroni correction (Dunn 1961), and it’s very very simple indeed. Suppose that my post hoc analysis consists of $m$ separate tests, and I want to ensure that the total probability of making any Type I errors at all is at most $\alpha$.211 If so, then the Bonferroni correction just says “multiply all your raw $p$-values by $m$”. If we let $p$ denote the original $p$-value, and let $p^\prime$ be the corrected value, then the Bonferroni correction tells us that:

$$
p^\prime = m \times p
$$

And therefore, if you’re using the Bonferroni correction, you would reject the null hypothesis if $p^\prime < \alpha$. The logic behind this correction is very straightforward. We’re doing $m$ different tests; so if we arrange it so that each test has a Type I error rate of at most $\alpha / m$, then the total Type I error rate across these tests cannot be larger than $\alpha$. That’s pretty simple, so much so that in the original paper, the author writes:

The method given here is so simple and so general that I am sure it must have been used before this. I do not find it, however, so can only conclude that perhaps its very simplicity has kept statisticians from realizing that it is a very good method in some situations (pp 52-53 Dunn 1961)

To use the Bonferroni correction in R, you can use the pairwise.t.test() function,212 making sure that you set p.adjust.method = "bonferroni". Alternatively, since the whole reason why we’re doing these pairwise tests in the first place is because we have an ANOVA that we’re trying to understand, it’s probably more convenient to use the posthocPairwiseT() function in the lsr package, since we can use my.anova as the input:

```r
posthocPairwiseT( my.anova, p.adjust.method = "bonferroni" )
##
## Pairwise comparisons using t tests with pooled SD
##
## data: mood.gain and drug
##
## placebo anxifree
## anxifree 0.4506 -
## joyzepam 9.1e-05 0.0017
##
## P value adjustment method: bonferroni
```

If we compare these three $p$-values to those that we saw in the previous section when we made no adjustment at all, it is clear that the only thing that R has done is multiply them by 3.
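The “multiply by $m$” rule can be checked directly with base R’s p.adjust(). The sketch below types in the unadjusted $p$-values from the earlier output (they are rounded, so the smallest adjusted value differs slightly from the 9.1e-05 printed above) and compares the by-hand version with the built-in one. Note that $p^\prime$ is capped at 1, since it is still a probability.

```r
# Unadjusted p-values as printed (rounded) in the earlier pairwise output
raw.p <- c(anxifree.vs.placebo  = 0.15021,
           joyzepam.vs.placebo  = 3e-05,
           joyzepam.vs.anxifree = 0.00056)
m <- length(raw.p)

bonf.by.hand  <- pmin(m * raw.p, 1)                      # multiply by m, cap at 1
bonf.built.in <- p.adjust(raw.p, method = "bonferroni")  # base R's implementation

bonf.by.hand
all.equal(bonf.by.hand, bonf.built.in)
```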

14.5.4 Holm corrections

Although the Bonferroni correction is the simplest adjustment out there, it’s not usually the best one to use. One method that is often used instead is the Holm correction (Holm 1979). The idea behind the Holm correction is to pretend that you’re doing the tests sequentially, starting with the smallest (raw) $p$-value and moving onto the largest one. For the $j$-th largest of the $p$-values, the adjustment is either

$$
p^\prime_j = j \times p_j
$$

(i.e., the biggest $p$-value remains unchanged, the second biggest $p$-value is doubled, the third biggest $p$-value is tripled, and so on), or

$$
p^\prime_j = p^\prime_{j+1}
$$

whichever one is larger. This might sound a little confusing, so let’s go through it a little more slowly. Here’s what the Holm correction does. First, you sort all of your $p$-values in order, from smallest to largest. For the smallest $p$-value all you do is multiply it by $m$, and you’re done. However, for all the other ones it’s a two-stage process. For instance, when you move to the second smallest $p$-value, you first multiply it by $m-1$. If this produces a number that is bigger than the adjusted $p$-value that you got last time, then you keep it. But if it’s smaller than the last one, then you copy the last $p$-value. To illustrate how this works, consider the table below, which shows the calculations of a Holm correction for a collection of five $p$-values:

| raw $p$ | rank $j$ | $p \times j$ | Holm $p$ |
|---|---|---|---|
| .001 | 5 | .005 | .005 |
| .005 | 4 | .020 | .020 |
| .019 | 3 | .057 | .057 |
| .022 | 2 | .044 | .057 |
| .103 | 1 | .103 | .103 |

Hopefully that makes things clear.
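The same procedure can be written out in a few lines of R, which makes the “keep it, or copy the last one” step explicit: it’s just a running maximum. The function name holm.by.hand is purely illustrative; the sketch checks its result against the table above and against base R’s p.adjust(), which also caps adjusted values at 1.

```r
# Holm correction written out step by step (illustrative helper, not a library function)
holm.by.hand <- function(p) {
  m <- length(p)
  o <- order(p)                        # positions of p from smallest to largest
  adj <- (m - seq_len(m) + 1) * p[o]   # smallest times m, next times m - 1, ...
  adj <- pmin(1, cummax(adj))          # never below the previous adjusted value; cap at 1
  adj[order(o)]                        # return to the original order
}

p <- c(.001, .005, .019, .022, .103)   # the five raw p-values from the table
holm.by.hand(p)
p.adjust(p, method = "holm")
```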

Although it’s a little harder to calculate, the Holm correction has some very nice properties: it’s more powerful than Bonferroni (i.e., it has a lower Type II error rate), but – counterintuitive as it might seem – it controls the Type I error rate across the family of tests at the same level. As a consequence, in practice there’s never any reason to use the simpler Bonferroni correction, since the slightly more elaborate Holm correction always does at least as well. Because of this, the Holm correction is the default one used by pairwise.t.test() and posthocPairwiseT(). To run the Holm correction in R, you could specify p.adjust.method = "holm" if you wanted to, but since it’s the default you can just do this:

```r
posthocPairwiseT( my.anova )
##
## Pairwise comparisons using t tests with pooled SD
##
## data: mood.gain and drug
##
## placebo anxifree
## anxifree 0.1502 -
## joyzepam 9.1e-05 0.0011
##
## P value adjustment method: holm
```

As you can see, the biggest $p$-value (corresponding to the comparison between Anxifree and the placebo) is unaltered: at a value of .15, it is exactly the same as the value we got originally when we applied no correction at all. In contrast, the smallest $p$-value (Joyzepam versus placebo) has been multiplied by three.

14.5.5 Writing up the post hoc test

Finally, having run the post hoc analysis to determine which groups are significantly different to one another, you might write up the result like this:

Post hoc tests (using the Holm correction to adjust $p$) indicated that Joyzepam produced a significantly larger mood change than both Anxifree ($p = .001$) and the placebo ($p = 9.1 \times 10^{-5}$). We found no evidence that Anxifree performed better than the placebo ($p = .15$).

Or, if you don’t like the idea of reporting exact $p$-values, then you’d change those numbers to $p < .01$, $p < .001$ and $p > .05$ respectively. Either way, the key thing is that you indicate that you used Holm’s correction to adjust the $p$-values. And of course, I’m assuming that elsewhere in the write-up you’ve included the relevant descriptive statistics (i.e., the group means and standard deviations), since these $p$-values on their own aren’t terribly informative.

14.6 Assumptions of one-way ANOVA

Like any statistical test, analysis of variance relies on some assumptions about the data. There are three key assumptions that you need to be aware of: normality, homogeneity of variance and independence. If you remember back to Section 14.2.4 – which I hope you at least skimmed even if you didn’t read the whole thing – I described the statistical models underpinning ANOVA, which I wrote down like this:

$$
\begin{array}{lrcl}
H_0: & Y_{ik} &=& \mu + \epsilon_{ik} \\
H_1: & Y_{ik} &=& \mu_k + \epsilon_{ik}
\end{array}
$$

In these equations $\mu$ refers to a single, grand population mean which is the same for all groups, and $\mu_k$ is the population mean for the $k$-th group. Up to this point we’ve been mostly interested in whether our data are best described in terms of a single grand mean (the null hypothesis) or in terms of different group-specific means (the alternative hypothesis). This makes sense, of course: that’s actually the important research question! However, all of our testing procedures have – implicitly – relied on a specific assumption about the residuals, $\epsilon_{ik}$, namely that

$$
\epsilon_{ik} \sim \mbox{Normal}(0, \sigma^2)
$$

None of the maths works properly without this bit. Or, to be precise, you can still do all the calculations, and you’ll end up with an $F$-statistic, but you have no guarantee that this $F$-statistic actually measures what you think it’s measuring, and so any conclusions that you might draw on the basis of the $F$-test might be wrong.

So, how do we check whether this assumption about the residuals is accurate? Well, as I indicated above, there are three distinct claims buried in this one statement, and we’ll consider them separately.

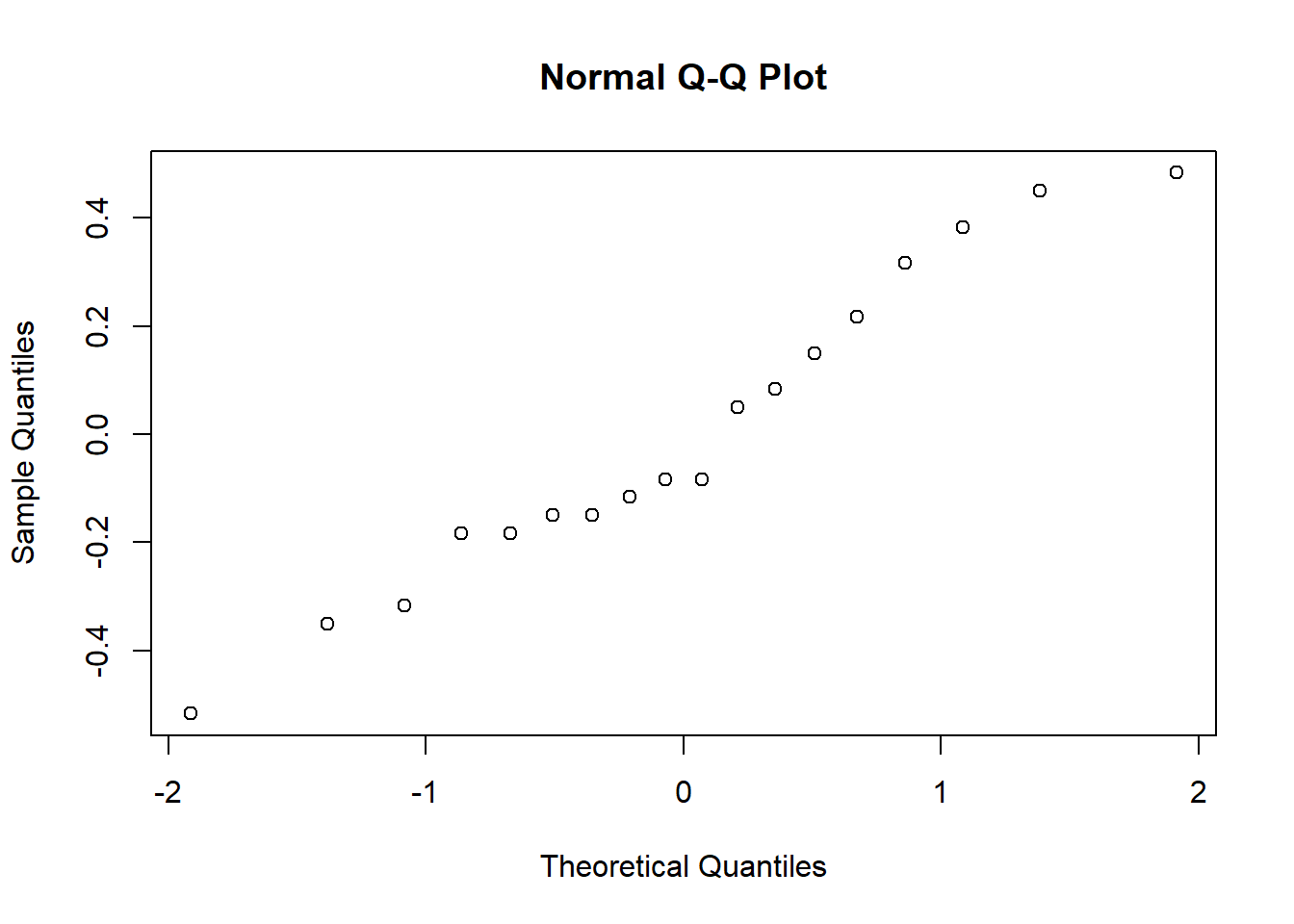

- Normality. The residuals are assumed to be normally distributed. As we saw in Section 13.9, we can assess this by looking at QQ plots or running a Shapiro-Wilk test. I’ll talk about this in an ANOVA context in Section 14.9.

- Homogeneity of variance. Notice that we’ve only got the one value for the population standard deviation (i.e., $\sigma$), rather than allowing each group to have its own value (i.e., $\sigma_k$). This is referred to as the homogeneity of variance (sometimes called homoscedasticity) assumption. ANOVA assumes that the population standard deviation is the same for all groups. We’ll talk about this extensively in Section 14.7.

- Independence. The independence assumption is a little trickier. What it basically means is that knowing one residual tells you nothing about any other residual. All of the $\epsilon_{ik}$ values are assumed to have been generated without any “regard for” or “relationship to” any of the other ones. There’s not an obvious or simple way to test for this, but there are some situations that are clear violations of this: for instance, if you have a repeated-measures design, where each participant in your study appears in more than one condition, then independence doesn’t hold; there’s a special relationship between some observations… namely those that correspond to the same person! When that happens, you need to use something like repeated measures ANOVA. I don’t currently talk about repeated measures ANOVA in this book, but it will be included in later versions.
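Since the normality and homogeneity assumptions are both claims about the residuals $\epsilon_{ik}$, a rough first look just means extracting the estimated residuals from the fitted model. The sketch below is only an eyeball check (the proper tools come in Sections 14.7 and 14.9), and independence can’t be assessed this way at all. It uses an assumed stand-in for clin.trial that reproduces this chapter’s sums of squares.

```r
# Stand-in for clin.trial (assumed values that reproduce this chapter's sums of squares)
clin.trial <- data.frame(
  drug = factor(rep(c("placebo", "anxifree", "joyzepam"), each = 6),
                levels = c("placebo", "anxifree", "joyzepam")),
  mood.gain = c(0.5, 0.3, 0.1, 0.6, 0.9, 0.3,
                0.6, 0.4, 0.2, 1.1, 0.8, 1.2,
                1.4, 1.7, 1.3, 1.8, 1.3, 1.4)
)
my.anova <- aov(mood.gain ~ drug, data = clin.trial)
res <- residuals(my.anova)   # estimated epsilon: each observation minus its group mean

tapply(res, clin.trial$drug, mean)   # essentially zero within every group
tapply(res, clin.trial$drug, sd)     # homogeneity of variance says these should be similar
shapiro.test(res)                    # a normality check on the residuals
```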

14.6.1 How robust is ANOVA?

One question that people often want to know the answer to is the extent to which you can trust the results of an ANOVA if the assumptions are violated. Or, to use the technical language, how robust is ANOVA to violations of the assumptions? Due to deadline constraints I don’t have the time to discuss this topic here; it’s one I’ll cover in some detail in a later version of the book.

14.7 Checking the homogeneity of variance assumption

There’s more than one way to skin a cat, as the saying goes, and more than one way to test the homogeneity of variance assumption, too (though for some reason no-one made a saying out of that). The most commonly used test for this that I’ve seen in the literature is the Levene test (Levene 1960), and the closely related Brown-Forsythe test (Brown and Forsythe 1974), both of which I’ll describe here. Alternatively, you could use the Bartlett test, which is implemented in R via the bartlett.test() function, but I’ll leave it as an exercise for the reader to go check that one out if you’re interested.

Levene’s test is shockingly simple. Suppose we have our outcome variable $Y_{ik}$. All we do is define a new variable, which I’ll call $Z_{ik}$, corresponding to the absolute deviation from the group mean:

$$
Z_{ik} = \left| Y_{ik} - \bar{Y}_k \right|
$$

Okay, what good does this do us? Well, let’s take a moment to think about what $Z_{ik}$ actually is, and what we’re trying to test. The value of $Z_{ik}$ is a measure of how the $i$-th observation in the $k$-th group deviates from its group mean. And our null hypothesis is that all groups have the same variance; that is, the same overall deviations from the group means! So, the null hypothesis in a Levene’s test is that the population means of $Z$ are identical for all groups. Hm. So what we need now is a statistical test of the null hypothesis that all group means are identical. Where have we seen that before? Oh right, that’s what ANOVA is… and so all that the Levene’s test does is run an ANOVA on the new variable $Z_{ik}$.

What about the Brown-Forsythe test? Does that do anything particularly different? Nope. The only change from the Levene’s test is that it constructs the transformed variable $Z$ in a slightly different way, using deviations from the group medians rather than deviations from the group means. That is, for the Brown-Forsythe test,

$$
Z_{ik} = \left| Y_{ik} - \mbox{median}_k(Y) \right|
$$

where $\mbox{median}_k(Y)$ is the median for group $k$. Regardless of whether you’re doing the standard Levene test or the Brown-Forsythe test, the test statistic – which is sometimes denoted $F$, but sometimes written as $W$ – is calculated in exactly the same way that the $F$-statistic for the regular ANOVA is calculated, just using $Z_{ik}$ rather than $Y_{ik}$. With that in mind, let’s just move on and look at how to run the test in R.
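Because both tests are just a one-way ANOVA on $Z_{ik}$, they can be sketched in base R with ave() to compute each observation’s group mean or median. The sketch below uses an assumed stand-in for clin.trial. If it matches the real data, the two $F$ values should agree with what leveneTest() reports in the next subsection for center = mean and center = median respectively.

```r
# Stand-in for clin.trial (assumed values that reproduce this chapter's sums of squares)
clin.trial <- data.frame(
  drug = factor(rep(c("placebo", "anxifree", "joyzepam"), each = 6),
                levels = c("placebo", "anxifree", "joyzepam")),
  mood.gain = c(0.5, 0.3, 0.1, 0.6, 0.9, 0.3,
                0.6, 0.4, 0.2, 1.1, 0.8, 1.2,
                1.4, 1.7, 1.3, 1.8, 1.3, 1.4)
)
y <- clin.trial$mood.gain
g <- clin.trial$drug

# Levene: absolute deviations from each observation's group mean
Z.mean <- abs(y - ave(y, g, FUN = mean))
# Brown-Forsythe: absolute deviations from each observation's group median
Z.median <- abs(y - ave(y, g, FUN = median))

# Each test is an ordinary one-way ANOVA on the transformed variable
F.levene <- summary(aov(Z.mean ~ g))[[1]][["F value"]][1]
F.bf     <- summary(aov(Z.median ~ g))[[1]][["F value"]][1]
c(levene = F.levene, brown.forsythe = F.bf)
```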

14.7.1 Running the Levene’s test in R

Okay, so how do we run the Levene test? Obviously, since the Levene test is just an ANOVA, it would be easy enough to manually create the transformed variable $Z_{ik}$ and then use the aov() function to run an ANOVA on that. However, that’s the tedious way to do it. A better way to run your Levene’s test is to use the leveneTest() function, which is in the car package. As usual, we first load the package:

library( car ) and now that we have, we can run our Levene test. The main argument that you need to specify is y, but you can do this in lots of different ways. Probably the simplest way to do it is actually input the original aov object. Since I’ve got the my.anova variable stored from my original ANOVA, I can just do this:

leveneTest( my.anova )

## Levene's Test for Homogeneity of Variance (center = median)

##       Df F value Pr(>F)

## group  2  1.4672 0.2618

##       15

If we look at the output, we see that the test is non-significant ($F_{2,15} = 1.47$, $p = .26$), so it looks like the homogeneity of variance assumption is fine. Remember, although R reports the test statistic as an $F$-value, it could equally be called $W$, in which case you’d just write $W_{2,15} = 1.47$. Also, note the part of the output that says center = median. That’s telling you that, by default, the leveneTest() function actually does the Brown-Forsythe test. If you want to use the mean instead, then you need to explicitly set the center argument, like this:

leveneTest( y = my.anova, center = mean )

## Levene's Test for Homogeneity of Variance (center = mean)

##       Df F value Pr(>F)

## group  2  1.4497 0.2657

##       15

That being said, in most cases it’s probably best to stick to the default value, since the Brown-Forsythe test is a bit more robust than the original Levene test.

14.7.2 Additional comments

Two more quick comments before I move on to a different topic. Firstly, as mentioned above, there are other ways of calling the leveneTest() function. Although the vast majority of situations that call for a Levene test involve checking the assumptions of an ANOVA (in which case you probably have a variable like my.anova lying around), sometimes you might find yourself wanting to specify the variables directly. Two different ways that you can do this are shown below:

leveneTest(y = mood.gain ~ drug, data = clin.trial) # y is a formula in this case

leveneTest(y = clin.trial$mood.gain, group = clin.trial$drug) # y is the outcome

Secondly, I did mention that it’s possible to run a Levene test just using the aov() function. I don’t want to waste a lot of space on this, but just in case some readers are interested in seeing how this is done, here’s the code that creates the new variables and runs an ANOVA. If you are interested, feel free to run this to verify that it produces the same answers as the Levene test (i.e., with center = mean):

Y <- clin.trial$mood.gain # the original outcome variable, Y

G <- clin.trial$drug # the grouping variable, G

gp.mean <- tapply(Y, G, mean) # calculate group means

Ybar <- gp.mean[G] # group mean associated with each obs

Z <- abs(Y - Ybar) # the transformed variable, Z

summary( aov(Z ~ G) ) # run the ANOVA

##           Df Sum Sq Mean Sq F value Pr(>F)

## G          2 0.0616 0.03080    1.45  0.266

## Residuals 15 0.3187 0.02125

That said, I don’t imagine that many people will care about this. Nevertheless, it’s nice to know that you could do it this way if you wanted to. And for those of you who do try it, I think it helps to demystify the test a little bit when you can see, with your own eyes, the way in which Levene’s test relates to ANOVA.
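The same do-it-yourself idea covers both variants of the test. Below is a minimal base-R sketch on made-up toy data (not the clin.trial data). The helper names anova_f() and levene_f() are hypothetical and introduced here only for illustration. The sketch computes $Z_{ik}$ around either the group mean (Levene) or the group median (Brown-Forsythe), then computes the ordinary one-way ANOVA $F$ from the between- and within-group sums of squares. With center = median we would expect it to reproduce what leveneTest() reports by default, but treat it as a teaching sketch rather than a replacement for the car function.

```r
# Hypothetical toy data (NOT the clin.trial data): outcome y, grouping factor g
y <- c(2.1, 3.4, 1.9, 2.8, 4.0, 5.5, 3.9, 6.1, 7.2, 6.8, 9.0, 5.9)
g <- factor(rep(c("a", "b", "c"), each = 4))

# Ordinary one-way ANOVA F statistic, computed from the sums of squares
anova_f <- function(z, g) {
  G <- nlevels(g)                       # number of groups
  N <- length(z)                        # total sample size
  zbar_k <- ave(z, g)                   # each observation's group mean
  ss_b <- sum((zbar_k - mean(z))^2)     # between-groups sum of squares
  ss_w <- sum((z - zbar_k)^2)           # within-groups sum of squares
  (ss_b / (G - 1)) / (ss_w / (N - G))
}

# Levene-type statistic: an ANOVA on absolute deviations from a group "centre"
levene_f <- function(y, g, center = median) {
  z <- abs(y - ave(y, g, FUN = center)) # Z_ik = |Y_ik - centre of group k|
  anova_f(z, g)
}

levene_f(y, g, center = mean)    # original Levene test
levene_f(y, g, center = median)  # Brown-Forsythe variant
```

The only difference between the two calls is the function passed as center, which is exactly the point made earlier: the two tests differ only in how $Z_{ik}$ is constructed.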

14.8 Removing the homogeneity of variance assumption

In our example, the homogeneity of variance assumption turned out to be a pretty safe one: the Levene test came back non-significant, so we probably don’t need to worry. However, in real life we aren’t always that lucky. How do we save our ANOVA when the homogeneity of variance assumption is violated? If you recall from our discussion of $t$-tests, we’ve seen this problem before. The Student $t$-test assumes equal variances, so the solution was to use the Welch $t$-test, which does not. In fact, Welch (1951) also showed how we can solve this problem for ANOVA (the Welch one-way test). It’s implemented in R using the oneway.test() function. The arguments that we’ll need for our example are:

- formula. This is the model formula, which (as usual) needs to specify the outcome variable on the left hand side and the grouping variable on the right hand side: i.e., something like outcome ~ group.
- data. Specifies the data frame containing the variables.
- var.equal. If this is FALSE (the default) a Welch one-way test is run. If it is TRUE then it just runs a regular ANOVA.

The function also has a subset argument that lets you analyse only some of the observations and a na.action argument that tells it how to handle missing data, but these aren’t necessary for our purposes. So, to run the Welch one-way ANOVA for our example, we would do this:

oneway.test(mood.gain ~ drug, data = clin.trial)

##

## One-way analysis of means (not assuming equal variances)

##

## data: mood.gain and drug

## F = 26.322, num df = 2.0000, denom df = 9.4932, p-value = 0.000134

To understand what’s happening here, let’s compare these numbers to what we got earlier in Section 14.3 when we ran our original ANOVA. To save you the trouble of flicking back, here are those numbers again, this time calculated by setting var.equal = TRUE for the oneway.test() function:

oneway.test(mood.gain ~ drug, data = clin.trial, var.equal = TRUE)

##

## One-way analysis of means

##

## data: mood.gain and drug

## F = 18.611, num df = 2, denom df = 15, p-value = 8.646e-05

Okay, so originally our ANOVA gave us the result $F(2,15) = 18.6$, $p < .001$, whereas the Welch one-way test gave us $F(2, 9.49) = 26.32$, $p < .001$. In other words, the Welch test has reduced the within-groups degrees of freedom from 15 to 9.49, and the $F$-value has increased from 18.6 to 26.32.
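If you’re curious where the fractional degrees of freedom come from, here is a minimal base-R sketch of the Welch one-way statistic using the standard textbook formulas. It runs on made-up toy data, and the helper name welch_anova() is hypothetical. Each group gets a weight $w_k = N_k / s_k^2$, the group means are compared against a weighted grand mean, and the denominator degrees of freedom are estimated from those weights. That estimate is why the Welch test reported 9.49 rather than 15 above. The sketch should agree with oneway.test(), but it is an illustration, not R’s source code.

```r
# Hypothetical toy data (NOT the clin.trial data)
y <- c(2.1, 3.4, 1.9, 2.8, 4.0, 5.5, 3.9, 6.1, 7.2, 6.8, 9.0, 5.9)
g <- factor(rep(c("a", "b", "c"), each = 4))

welch_anova <- function(y, g) {
  G   <- nlevels(g)                   # number of groups
  n_k <- tapply(y, g, length)         # group sizes
  m_k <- tapply(y, g, mean)           # group means
  w_k <- n_k / tapply(y, g, var)      # weights: group size / group variance
  W   <- sum(w_k)
  m_w <- sum(w_k * m_k) / W           # variance-weighted grand mean
  lambda <- sum((1 - w_k / W)^2 / (n_k - 1))
  num <- sum(w_k * (m_k - m_w)^2) / (G - 1)
  den <- 1 + 2 * (G - 2) * lambda / (G^2 - 1)
  c(F = num / den,                    # Welch F statistic
    df1 = G - 1,                      # numerator df
    df2 = (G^2 - 1) / (3 * lambda))   # (usually fractional) denominator df
}

welch_anova(y, g)
oneway.test(y ~ g)  # built-in Welch test, for comparison
```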

14.9 Checking the normality assumption

Testing the normality assumption is relatively straightforward. We covered most of what you need to know in Section 13.9. The only thing we really need to know how to do is pull out the residuals (i.e., the $\epsilon_{ik}$ values) so that we can draw our QQ plot and run our Shapiro-Wilk test. First, let’s extract the residuals. R provides a function called residuals() that will do this for us. If we pass our my.anova to this function, it will return the residuals. So let’s do that:

my.anova.residuals <- residuals( object = my.anova ) # extract the residuals

We can print them out too, though it’s not exactly an edifying experience. In fact, given that I’m on the verge of putting myself to sleep just typing this, it might be a good idea to skip that step. Instead, let’s draw some pictures and run ourselves a hypothesis test:

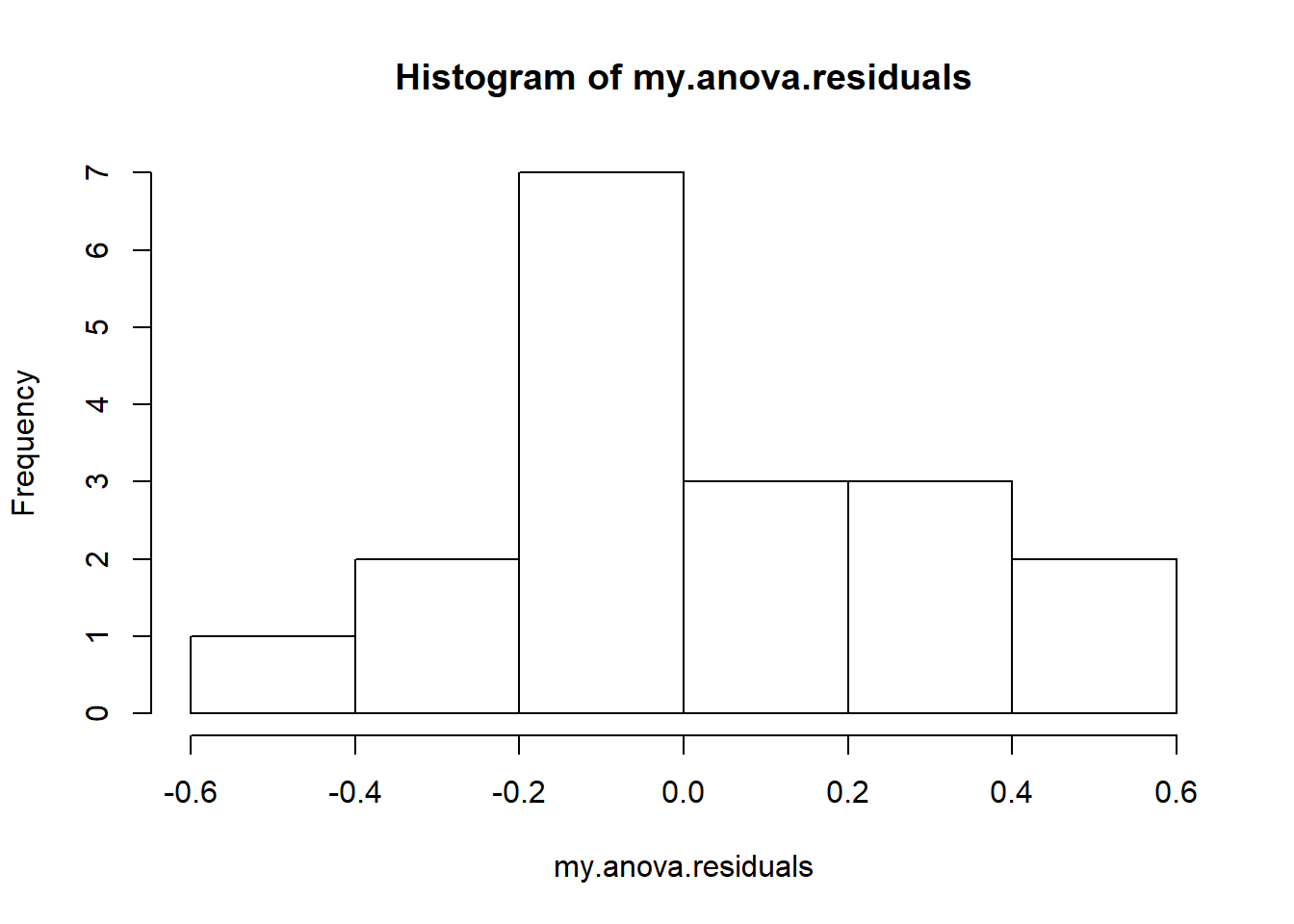

hist( x = my.anova.residuals ) # plot a histogram (similar to Figure @ref{fig:normalityanova}a)

qqnorm( y = my.anova.residuals ) # draw a QQ plot (similar to Figure @ref{fig:normalityanova}b)

shapiro.test( x = my.anova.residuals ) # run Shapiro-Wilk test

##

## Shapiro-Wilk normality test

##

## data: my.anova.residuals

## W = 0.96019, p-value = 0.6053

The histogram and QQ plot both look pretty normal to me.[^213] This is supported by the results of our Shapiro-Wilk test ($W = .96$, $p = .61$), which finds no indication that normality is violated.
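It can help to see what these residuals actually are. For a one-way ANOVA, the fitted value for every observation is simply its group mean, so each residual $\epsilon_{ik}$ is $Y_{ik} - \bar{Y}_k$. Here is a small base-R sketch on made-up toy data (not the clin.trial data) that checks this before running the same Shapiro-Wilk test.

```r
# Hypothetical toy data (NOT the clin.trial data)
y <- c(2.1, 3.4, 1.9, 2.8, 4.0, 5.5, 3.9, 6.1, 7.2, 6.8, 9.0, 5.9)
g <- factor(rep(c("a", "b", "c"), each = 4))

fit <- aov(y ~ g)                # one-way ANOVA
eps <- residuals(fit)            # residuals extracted by R
manual <- y - ave(y, g)          # Y_ik minus its group mean, by hand

all.equal(unname(eps), manual)   # the two should agree
shapiro.test(eps)                # normality check on the residuals
```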

14.10 Removing the normality assumption

Now that we’ve seen how to check for normality, we are led naturally to ask what we can do to address violations of normality. In the context of a one-way ANOVA, the easiest solution is probably to switch to a non-parametric test (i.e., one that doesn’t rely on any particular assumption about the kind of distribution involved). We’ve seen non-parametric tests before, in Chapter 13: when you only have two groups, the Wilcoxon test provides the non-parametric alternative that you need. When you’ve got three or more groups, you can use the Kruskal-Wallis rank sum test (Kruskal and Wallis 1952). So that’s the test we’ll talk about next.

14.10.1 The logic behind the Kruskal-Wallis test

The Kruskal-Wallis test is surprisingly similar to ANOVA, in some ways. In ANOVA, we started with $Y_{ik}$, the value of the outcome variable for the $i$th person in the $k$th group. For the Kruskal-Wallis test, what we’ll do is rank order all of these $Y_{ik}$ values, and conduct our analysis on the ranked data. So let’s let $R_{ik}$ refer to the ranking given to the $i$th member of the $k$th group. Now, let’s calculate $\bar{R}_k$, the average rank given to observations in the $k$th group:

$$\bar{R}_k = \frac{1}{N_k} \sum_{i} R_{ik}$$

and let’s also calculate $\bar{R}$, the grand mean rank:

$$\bar{R} = \frac{1}{N} \sum_{i} \sum_{k} R_{ik}$$

Now that we’ve done this, we can calculate the squared deviations from the grand mean rank $\bar{R}$. When we do this for the individual scores, i.e., if we calculate $(R_{ik} - \bar{R})^2$, what we have is a “nonparametric” measure of how far the $ik$-th observation deviates from the grand mean rank. When we calculate the squared deviation of the group means from the grand mean, i.e., if we calculate $(\bar{R}_k - \bar{R})^2$, then what we have is a nonparametric measure of how much the group deviates from the grand mean rank. With this in mind, let’s follow the same logic that we did with ANOVA, and define our ranked sums of squares measures in much the same way that we did earlier. First, we have our “total ranked sums of squares”:

$$\mathrm{RSS}_{tot} = \sum_k \sum_i (R_{ik} - \bar{R})^2$$

and we can define the “between groups ranked sums of squares” like this:

$$\mathrm{RSS}_{b} = \sum_k \sum_i (\bar{R}_k - \bar{R})^2 = \sum_k N_k (\bar{R}_k - \bar{R})^2$$

So, if the null hypothesis is true and there are no true group differences at all, you’d expect the between group rank sums $\mathrm{RSS}_b$ to be very small, much smaller than the total rank sums $\mathrm{RSS}_{tot}$. Qualitatively this is very much the same as what we found when we went about constructing the ANOVA $F$-statistic; but for technical reasons the Kruskal-Wallis test statistic, usually denoted $K$, is constructed in a slightly different way:

$$K = (N - 1) \times \frac{\mathrm{RSS}_b}{\mathrm{RSS}_{tot}}$$

and, if the null hypothesis is true, then the sampling distribution of $K$ is approximately chi-square with $G-1$ degrees of freedom (where $G$ is the number of groups). The larger the value of $K$, the less consistent the data are with the null hypothesis, so this is a one-sided test: we reject $H_0$ when $K$ is sufficiently large.
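To make the “ANOVA on ranks” reading concrete, here is a minimal base-R sketch that computes $K$ directly from the definitions above. It uses made-up toy data with no tied values (not the clin.trial data), and the helper name kw_from_ranks() is hypothetical. The one-sided $p$-value comes from the upper tail of a chi-square distribution with $G-1$ degrees of freedom, and both numbers should match kruskal.test() on this tie-free data.

```r
# Hypothetical toy data with no ties (NOT the clin.trial data)
y <- c(2.1, 3.4, 1.9, 2.8, 4.0, 5.5, 3.9, 6.1, 7.2, 6.8, 9.0, 5.9)
g <- factor(rep(c("a", "b", "c"), each = 4))

kw_from_ranks <- function(y, g) {
  R <- rank(y)                       # R_ik: rank of each observation
  N <- length(y)                     # total sample size
  Rbar_k <- ave(R, g)                # each observation's group mean rank
  Rbar <- mean(R)                    # grand mean rank
  rss_tot <- sum((R - Rbar)^2)       # total ranked sum of squares
  rss_b <- sum((Rbar_k - Rbar)^2)    # between-groups ranked sum of squares
  (N - 1) * rss_b / rss_tot          # K = (N - 1) * RSS_b / RSS_tot
}

K <- kw_from_ranks(y, g)
p_value <- pchisq(K, df = nlevels(g) - 1, lower.tail = FALSE)  # upper tail only
c(K = K, p = p_value)
kruskal.test(y ~ g)                  # built-in test, for comparison
```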

14.10.2 Additional details

The description in the previous section illustrates the logic behind the Kruskal-Wallis test. At a conceptual level, this is the right way to think about how the test works. However, from a purely mathematical perspective it’s needlessly complicated. I won’t show you the derivation, but you can use a bit of algebraic jiggery-pokery[^214] to show that the equation for $K$ can be rewritten as

$$K = \frac{12}{N(N+1)} \sum_k N_k \bar{R}_k^2 - 3(N+1)$$

It’s this last equation that you sometimes see given for $K$. This is way easier to calculate than the version I described in the previous section; it’s just that it’s totally meaningless to actual humans. It’s probably best to think of $K$ the way I described it earlier… as an analogue of ANOVA based on ranks. But keep in mind that the test statistic that gets calculated ends up with a rather different look to it than the one we used for our original ANOVA.
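As a quick sanity check that the shortcut really is the same quantity, here is a base-R sketch on made-up, tie-free toy data (not the clin.trial data). The helper name kw_shortcut() is hypothetical. On data without ties, it should give the same value as the definitional $(N-1)\,\mathrm{RSS}_b / \mathrm{RSS}_{tot}$ and as kruskal.test().

```r
# Hypothetical toy data with no ties (NOT the clin.trial data)
y <- c(2.1, 3.4, 1.9, 2.8, 4.0, 5.5, 3.9, 6.1, 7.2, 6.8, 9.0, 5.9)
g <- factor(rep(c("a", "b", "c"), each = 4))

kw_shortcut <- function(y, g) {
  R <- rank(y)                       # ranks of the raw data
  N <- length(y)                     # total sample size
  N_k <- tapply(R, g, length)        # group sizes
  Rbar_k <- tapply(R, g, mean)       # mean rank in each group
  12 / (N * (N + 1)) * sum(N_k * Rbar_k^2) - 3 * (N + 1)
}

kw_shortcut(y, g)
```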

But wait, there’s more! Dear lord, why is there always more? The story I’ve told so far is only actually true when there are no ties in the raw data; that is, when no two observations have exactly the same value. If there are ties, then we have to introduce a correction factor to these calculations. At this point I’m assuming that even the most diligent reader has stopped caring (or at least formed the opinion that the tie-correction factor is something that doesn’t require their immediate attention). So I’ll very quickly tell you how it’s calculated, and omit the tedious details about why it’s done this way. Suppose we construct a frequency table for the raw data, and let $f_j$ be the number of observations that have the $j$-th unique value. This might sound a bit abstract, so here’s the R code showing a concrete example:

f <- table( clin.trial$mood.gain ) # frequency table for mood gain

print(f) # we have some ties

##

## 0.1 0.2 0.3 0.4 0.5 0.6 0.8 0.9 1.1 1.2 1.3 1.4 1.7 1.8

##   1   1   2   1   1   2   1   1   1   1   2   2   1   1

Looking at this table, notice that the third entry in the frequency table has a value of $2$. Since this corresponds to a mood.gain of 0.3, this table is telling us that two people’s mood increased by 0.3. More to the point, note that we can say that f[3] has a value of 2. Or, in the mathematical notation I introduced above, this is telling us that $f_3 = 2$. Yay. So, now that we know this, the tie correction factor (TCF) is:

$$\mathrm{TCF} = 1 - \frac{\sum_j \left( f_j^3 - f_j \right)}{N^3 - N}$$

The tie-corrected value of the Kruskal-Wallis statistic is obtained by dividing the value of $K$ by this quantity: it is this tie-corrected version that R calculates. And at long last, we’re actually finished with the theory of the Kruskal-Wallis test. I’m sure you’re all terribly relieved that I’ve cured you of the existential anxiety that naturally arises when you realise that you don’t know how to calculate the tie-correction factor for the Kruskal-Wallis test. Right?
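For anyone who does want to see the tie correction in action, here is a minimal base-R sketch on made-up toy data that deliberately contains ties (not the clin.trial data). The helper name kw_tie_corrected() is hypothetical. It computes the shortcut form of $K$ using average ranks for tied values, builds $\mathrm{TCF}$ from the frequency table exactly as defined above, and divides. The result should match the statistic that kruskal.test() reports for the same data.

```r
# Hypothetical toy data WITH ties (NOT the clin.trial data)
y_t <- c(1, 2, 2, 3, 4, 4, 4, 5, 6, 7, 7, 8)
g_t <- factor(rep(c("a", "b", "c"), each = 4))

kw_tie_corrected <- function(y, g) {
  R <- rank(y)                  # tied values share their average rank
  N <- length(y)
  K <- 12 / (N * (N + 1)) *
    sum(tapply(R, g, length) * tapply(R, g, mean)^2) - 3 * (N + 1)
  f <- table(y)                 # f_j: frequency of the j-th unique value
  TCF <- 1 - sum(f^3 - f) / (N^3 - N)
  K / TCF                       # tie-corrected Kruskal-Wallis statistic
}

kw_tie_corrected(y_t, g_t)
kruskal.test(y_t ~ g_t)         # built-in test, for comparison
```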

14.10.3 How to run the Kruskal-Wallis test in R

Despite the horror that we’ve gone through in trying to understand what the Kruskal-Wallis test actually does, it turns out that running the test is pretty painless, since R has a function called kruskal.test(). The function is pretty flexible, and allows you to input your data in a few different ways. Most of the time you’ll have data like the clin.trial data set, in which you have your outcome variable mood.gain, and a grouping variable drug. If so, you can call the kruskal.test() function by specifying a formula, and a data frame:

kruskal.test(mood.gain ~ drug, data = clin.trial)

##

## Kruskal-Wallis rank sum test

##

## data: mood.gain by drug

## Kruskal-Wallis chi-squared = 12.076, df = 2, p-value = 0.002386

A second way of using the kruskal.test() function, which you probably won’t have much reason to use, is to directly specify the outcome variable and the grouping variable as separate input arguments, x and g:

kruskal.test(x = clin.trial$mood.gain, g = clin.trial$drug)

##

## Kruskal-Wallis rank sum test

##

## data: clin.trial$mood.gain and clin.trial$drug